Abstract

We solve a conjecture raised by Kapovitch, Lytchak and Petrunin in [KLP21] by showing that the metric measure boundary is vanishing on any \({{\,\textrm{RCD}\,}}(K,N)\) space \((X,{\textsf{d}},{\mathscr {H}}^N)\) without boundary. Our result, combined with [KLP21], settles an open question about the existence of infinite geodesics on Alexandrov spaces without boundary raised by Perelman and Petrunin in 1996.

1 Introduction and main results

We study the metric measure boundary of noncollapsed spaces with Ricci curvature bounded from below. We work within the framework of \({{\,\textrm{RCD}\,}}(K,N)\) spaces, a class of infinitesimally Hilbertian metric measure spaces verifying the synthetic Curvature-Dimension condition \({{\,\textrm{CD}\,}}(K,N)\) from [S06a, S06b, LV09]. We assume that the reader is familiar with the \({{\,\textrm{RCD}\,}}\) theory and refer to [AGS14, G15, AGMR15, AGS15, EKS15, AMS19, CM21] for the basic background. Further references for the statements relevant to our purposes will be pointed out in the course of the note.

Given an \({{\,\textrm{RCD}\,}}(-(N-1),N)\) space \((X,{\textsf{d}}, {\mathscr {H}}^{N})\) and \(r>0\), we introduce

where \({\mathcal {V}}_r\) is the deviation measure in the terminology of [KLP21], \({\mathscr {H}}^N\) is the N-dimensional Hausdorff measure, \(\omega _N\) is the volume of the unit ball in \({\mathbb {R}}^N\) and \(B_r(x)\) denotes the open ball of radius r centered at \(x\in X\).

If \((X,{\textsf{d}})\) is isometric to a smooth N-dimensional Riemannian manifold (M, g) without boundary, it is a classical result that

at any point x, where \(\textrm{Scal}(x)\) denotes the scalar curvature of (M, g) at x. Then it is a standard computation to show that

when \((X,{\textsf{d}})\) is isometric to a smooth Riemannian manifold with boundary \(\partial X\). Here \({\mathscr {H}}^{N-1}\) is the \((N-1)\)-dimensional volume measure and \(\gamma (N)>0\) is a universal constant depending only on the dimension (see (1.5) below for the explicit expression).

This observation motivates the following definition, see [KLP21, Definition 1.5].

Definition 1.1

We say that an \({{\,\textrm{RCD}\,}}(-(N-1),N)\) space \((X,{\textsf{d}},{\mathscr {H}}^N)\) has locally finite metric measure boundary if the family of Radon measures \(\mu _r\) as in (1.1) is locally uniformly bounded for \(0<r\le 1\). If there exists a weak limit \(\mu =\lim _{r\downarrow 0}\mu _r\), then we shall call \(\mu \) the metric measure boundary of \((X,{\textsf{d}},{\mathscr {H}}^N)\). Moreover, if \(\mu =0\), we shall say that X has vanishing metric measure boundary.
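As a quick sanity check of Definition 1.1 in the smooth case, the following Python sketch evaluates the deviation on the round unit sphere \(S^2\), where a geodesic ball of radius r has area \(2\pi (1-\cos r)\). It assumes that the density of \(\mu _r\) with respect to \({\mathscr {H}}^N\) is \({\mathcal {V}}_r(x)/r\) with \({\mathcal {V}}_r(x)=1-{\mathscr {H}}^N(B_r(x))/(\omega _Nr^N)\), following the terminology of [KLP21]; this normalization and the function names are assumptions made only for illustration.

```python
import math

def deviation_on_unit_sphere(r):
    """Assumed deviation V_r = 1 - area(B_r) / (omega_2 r^2) on the round unit S^2."""
    ball_area = 2.0 * math.pi * (1.0 - math.cos(r))  # area of a geodesic ball of radius r
    return 1.0 - ball_area / (math.pi * r * r)

def density_of_mu_r(r):
    """Assumed density of mu_r with respect to H^2, namely V_r / r."""
    return deviation_on_unit_sphere(r) / r

for r in (0.4, 0.2, 0.1, 0.05):
    # V_r / r^2 should approach Scal / (6 (N + 2)) = 2 / 24 = 1/12 for N = 2,
    # so the assumed density V_r / r decays linearly in r.
    print(r, deviation_on_unit_sphere(r) / r**2, density_of_mu_r(r))
```

Under these assumptions the density decays linearly in r, which is consistent with a smooth closed manifold having vanishing metric measure boundary.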

The above notion of metric measure boundary is analytic in nature. There have been proposals of more geometric notions of boundary in [DPG18, KM19], based on tangent cones. The notions of boundary given in [DPG18, KM19] may a priori differ; however, they are conjecturally equivalent. Moreover, in [BNS22] it was shown that the boundary is empty in the sense of [DPG18] if and only if it is empty in the sense of [KM19]. Thus, the condition “empty boundary \(\partial X=\emptyset \)” is independent of the chosen (geometric) definition. One of the main results of the present paper, Theorem 1.2 below, states that if the geometric boundary is empty, then the metric measure boundary also vanishes. Let us now recall the basic definitions in order to give the statement precisely.

Following [DPG18], the boundary of an \({{\,\textrm{RCD}\,}}(-(N-1),N)\) space \((X,{\textsf{d}},{\mathscr {H}}^N)\) is defined as the closure of the top dimensional singular stratum

When \({\mathcal {S}}^{N-1}{\setminus }{\mathcal {S}}^{N-2}=\emptyset \), we say that X has no boundary. We refer to [BNS22] (see also the earlier [DPG18, KM19]) for an account of the regularity and stability of boundaries of \({{\,\textrm{RCD}\,}}\) spaces.

Our goal is to prove the following.

Theorem 1.2

Let \(N\ge 1\) and \((X,{\textsf{d}},{\mathscr {H}}^N)\) be an \({{\,\textrm{RCD}\,}}(-(N-1),N)\) space. Let \(p\in X\) be such that \(B_2(p)\cap \big ( {\mathcal {S}}^{N-1}{\setminus }{\mathcal {S}}^{N-2} \big )= \emptyset \) and \({\mathscr {H}}^N(B_1(p)) \ge v>0\). Then

and \(\lim _{r\downarrow 0} |\mu _r|(B_1(p)) = 0\). In particular, if X has empty boundary then it has vanishing metric measure boundary.

The effective bound (1.4) is new even when \((X,{\textsf{d}})\) is isometric to a smooth N-dimensional manifold satisfying \({{\,\textrm{Ric}\,}}\ge -(N-1)\). However, the most relevant outcome of Theorem 1.2 is the second conclusion, showing that \({{\,\textrm{RCD}\,}}\) spaces \((X,{\textsf{d}},{\mathscr {H}}^N)\) with empty boundary have vanishing metric measure boundary. This implication was unknown even in the setting of Alexandrov spaces, where it was conjectured to be true by Kapovitch–Lytchak–Petrunin [KLP21]. By the compatibility between the theory of Alexandrov spaces with sectional curvature bounded from below and the \({{\,\textrm{RCD}\,}}\) theory, see [P11] and the subsequent [ZZ10], Theorem 1.2 fully solves this conjecture.

We are able to control the metric measure boundary also for \({{\,\textrm{RCD}\,}}(-(N-1),N)\) spaces \((X,{\textsf{d}},{\mathscr {H}}^N)\) with boundary under an extra assumption. The latter is always satisfied on Alexandrov spaces with sectional curvature bounded below and on noncollapsed limits of manifolds with convex boundary and Ricci curvature uniformly bounded below.

We shall denote

where \((0,s)\in {\mathbb {R}}^{N-1}\times {\mathbb {R}}_+\). Moreover, we set

Theorem 1.3

Let \((X,{\textsf{d}},{\mathscr {H}}^N)\) be either an Alexandrov space with (sectional) curvature \(\ge -1\) or a noncollapsed limit of manifolds with convex boundary and \({{\,\textrm{Ric}\,}}\ge -(N-1)\) in the interior. Let \(p\in X\) be such that \({\mathscr {H}}^N(B_1(p))\ge v>0\). Then

Moreover,

where \(\gamma (N)>0\) is the constant defined in (1.5).

In other words, the metric measure boundary coincides with the boundary measure. We refer to Section 6 for a more general statement.
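To get a feeling for the constant \(\gamma (N)\), one can carry out the model computation on the flat half-space \({\mathbb {R}}^{N-1}\times {\mathbb {R}}_+\). The Python sketch below assumes, as in the terminology of [KLP21], that the density of \(\mu _r\) is the volume deficit of \(B_r(x)\) divided by r. For a point at height \(s<r\), the deficit is the fraction of the ball cut off by the boundary hyperplane, and integrating over the height gives a constant independent of r. The closed form \(\omega _{N-1}/((N+1)\omega _N)\) used for comparison is derived from this model computation and is not quoted from (1.5); all function names are hypothetical.

```python
import math

def unit_ball_volume(n):
    """Volume omega_n of the unit ball in R^n (omega_0 = 1)."""
    return math.pi ** (n / 2) / math.gamma(n / 2 + 1)

def cut_fraction(n, t, steps=400):
    """Fraction of the unit ball in R^n lying above height t in [0, 1] (midpoint rule)."""
    if t >= 1.0:
        return 0.0
    h = (1.0 - t) / steps
    slices = sum((1.0 - (t + (k + 0.5) * h) ** 2) ** ((n - 1) / 2) for k in range(steps))
    return unit_ball_volume(n - 1) * slices * h / unit_ball_volume(n)

def half_space_constant(n, steps=400):
    """Integral over the rescaled height t = s / r of the volume deficit (midpoint rule)."""
    h = 1.0 / steps
    return sum(cut_fraction(n, (k + 0.5) * h) for k in range(steps)) * h

def closed_form(n):
    """Value omega_{n-1} / ((n + 1) omega_n) obtained by exchanging the two integrals."""
    return unit_ball_volume(n - 1) / ((n + 1) * unit_ball_volume(n))

for n in (1, 2, 3):
    print(n, half_space_constant(n), closed_form(n))
```

For \(N=1\) the computation can be done by hand: the deficit at height s is \((r-s)/(2r)\), and its integral against \(ds/r\) over \((0,r)\) equals 1/4.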

On a complete Riemannian manifold without boundary all geodesics extend for all times, while in the presence of boundary the amount of geodesics that terminate (on the boundary) is measured by the size of the boundary. This is of course too much to hope for on general metric spaces. However, as shown in [KLP21, Theorem 1.6], when the metric measure boundary is vanishing on an Alexandrov space with sectional curvature bounded from below, then there are many infinite geodesics. We refer to [KLP21, Section 3] for the definitions of tangent bundle, geodesic flow and Liouville measure in the setting of Alexandrov spaces. An immediate application of Theorem 1.2, when combined with [KLP21, Theorem 1.6], is the following.

Theorem 1.4

Let \((X,{\textsf{d}})\) be an Alexandrov space with empty boundary. Then almost each direction of the tangent bundle TX is the starting direction of an infinite geodesic. Moreover, the geodesic flow preserves the Liouville measure on TX.

In particular, the above gives an affirmative answer to an open question raised by Perelman–Petrunin [PP96] about the existence of infinite geodesics on Alexandrov spaces with empty topological boundary.

1.1 Outline of proof.

The main challenge in the study of the metric measure boundary is to control the mass of inner balls, i.e. balls located sufficiently far away from the boundary. This is the aim of Theorem 1.2, whose proof occupies the first five sections of this paper and requires several new ideas. Once Theorem 1.2 is established, Theorem 1.3 follows from a careful analysis of boundary balls. The latter is outlined in Section 6.

Let us now describe the proof of Theorem 1.2. Given a ball \(B_1(p)\subset X\) such that \(B_2(p)\cap \partial X = \emptyset \), we aim at finding uniform bounds on the family of approximating measures

and at showing that

Morally, (1.9) amounts to saying that the identity

holds on average on \(B_1(p)\). The Bishop-Gromov inequality says that the limit

exists at every point. Moreover, its value is 1 if and only if x is a regular point, i.e. its tangent cone is Euclidean. In particular, the limit differs from 1 only at singular points, which form a set of Hausdorff dimension at most \(N-2\) if there is no boundary. This is a highly nontrivial statement, although now classical, as it requires the volume convergence theorem and the basic regularity theory for noncollapsed spaces with lower Ricci bounds [C97, CC97, DPG18]. Analogous statements have been known for Alexandrov spaces with curvature bounded from below since [BGP92].
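For orientation, the limit discussed above is the volume density of the space. A sketch of the standard argument for its existence, with \(\theta \) a symbol introduced here only for illustration and \(v_{-1,N}(r)\) the volume of the ball of radius r in the model space of constant sectional curvature \(-1\) and dimension N, reads as follows:

\[
r\mapsto \frac{{\mathscr {H}}^N(B_r(x))}{v_{-1,N}(r)}\ \text{ is non-increasing},\qquad \lim _{r\downarrow 0}\frac{v_{-1,N}(r)}{\omega _Nr^N}=1 \quad \Longrightarrow \quad \theta (x):=\lim _{r\downarrow 0}\frac{{\mathscr {H}}^N(B_r(x))}{\omega _Nr^N}\ \text{ exists.}
\]

In this notation, the statement above says that \(\theta (x)=1\) if and only if x is a regular point.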

The proof of (1.8) and (1.9) is based on three main ingredients:

(1) a new quantitative volume convergence result via \(\delta \)-splitting maps, see Proposition 3.2;

(2) an \(\varepsilon \)-regularity theorem, see Theorem 2.1, stating (roughly) that for balls which are sufficiently close to the Euclidean ball in the Gromov-Hausdorff topology, the approximating measure \(\mu _r\) as in (1.1) is small;

(3) a series of quantitative covering arguments.

The first two ingredients are the main contributions of the present work. We believe that they are of independent interest and have a strong potential for future applications in the study of spaces with Ricci curvature bounded below.

The ingredient (3) comes from the recent [BNS22], see Theorem 2.2 for the precise statement and [JN16, CJN21, KLP21, LN20] for earlier versions in different contexts. It is used to globalize local bounds obtained out of (2) by summing up good scale invariant bounds on almost Euclidean balls.

The other main tools that we borrow from the existing literature are: the existence of harmonic “almost splitting” functions with \(L^2\)-Hessian bounds on almost Euclidean balls (see [BPS19, BNS22] after [CC96, CC97, CN15, CJN21]), the second order differential calculus for \({{\,\textrm{RCD}\,}}\) spaces (see [G18]), and the boundary regularity theory for \({{\,\textrm{RCD}\,}}(K,N)\) spaces endowed with the Hausdorff measure \({\mathscr {H}}^N\) (see in particular [BNS22, Theorem 1.2, Theorem 1.4, Theorem 8.1]).

1.1.1 Quantitative volume convergence.

The starting point of our analysis is (1). It provides a quantitative control on the volume of almost Euclidean balls, i.e. balls \(B_1(p)\subset X\) such that

in terms of \(\delta \)-splitting maps \(u:B_5(p)\rightarrow {\mathbb {R}}^N\). The latter are integrally good approximations of the canonical coordinates of \({\mathbb {R}}^N\) satisfying

see [CC96, CC97, CN15, CJN21] for the theory on smooth manifolds and Ricci limit spaces and the subsequent [BNS22] for the present setting. The key inequality proven in Proposition 3.2 reads as

at any regular point \(x\in B_5(p)\), for any \(r<5\). The term appearing in the right hand side measures to what extent \(u:B_5(p)\rightarrow {\mathbb {R}}^N\) well-approximates the Euclidean coordinates at any scale \(r\in (0,5)\) around x.

In order to prove (1.14), we use the components of the splitting map to construct an approximate solution of the equations

with \(r\ge 0\) and \(r(x)=0\). The approximate solution is obtained as \(r^2:=\sum _iu_i^2\), after normalizing so that \(u(x)=0\), and the right hand side in (1.14) controls the precision of this approximation, see Lemma 3.7. Then the idea is that when \({{\,\textrm{Ric}\,}}\ge 0\) the existence of a solution of (1.15) would force the volume ratio to be constant along scales. In Lemma 3.6 we prove an effective version of this where errors are taken into account quantitatively.

We remark that, following the proofs of the volume convergence in [C97, CC00, C01], one would get an estimate

for some \(\alpha (N)<1\), while for our applications it is fundamental to have a linear dependence at the right hand side. This is achieved by estimating the derivative at any scale,

see Corollary 3.8, and then integrating with respect to the scale. The improved dependence comes at the price of considering a multi-scale object at the right hand side.

1.1.2 A new \(\varepsilon \)-regularity theorem.

Let us now outline the ingredient (2). The \(\varepsilon \)-regularity theorem, Theorem 2.1, amounts to showing that the scale invariant volume ratio in (1.11) converges to 1 at the quantitative rate o(r) on average on a ball \(B_{10}(p)\) which is sufficiently close to the Euclidean ball \(B^{{\mathbb {R}}^N}_{10}(0)\subset {\mathbb {R}}^N\) in the Gromov-Hausdorff sense.

In order to prove it, we employ the quantitative volume bound (1.14). There are two key points to take into account when dealing with harmonic splitting maps in the present setting:

(a) they cannot be bi-Lipschitz in general, as they do not remain \(\delta \)-splitting maps when restricted to smaller balls \(B_r(x)\subset B_5(p)\),

(b) they have good \(L^2\) integral controls on their Hessians.

This is in contrast with distance coordinates in Alexandrov geometry, which are bi-Lipschitz but have good controls only on the total variation of their measure valued Hessians, see [Per95]. On the one hand, (a) makes controlling the metric measure boundary much more delicate than in the Alexandrov case. On the other hand, (b) is where the crucial gain with respect to the previous [KLP21] appears. Indeed, the \(L^p\) integrability for \(p\ge 1\) allows us to show that the metric measure boundary cannot concentrate on a set negligible with respect to \({\mathscr {H}}^N\). At this point, it will be sufficient to prove that the rate of convergence to 1 in (1.11) is o(r) at \({\mathscr {H}}^N\)-a.e. point.

The key observation to deal with (a) is that, even though a \(\delta \)-splitting map can degenerate, it remains quantitatively well behaved away from a set \(E\subset B_5(p)\) for which there exists a covering

and \(\delta '\rightarrow 0\) as \(\delta \rightarrow 0\). Moreover, on \(B_5(p){\setminus } E\) the splitting map becomes polynomially better and better when restricted to smaller balls, after composition with a linear transformation close to the identity in the image. Namely, there exists a linear map \(A_x:{\mathbb {R}}^N\rightarrow {\mathbb {R}}^N\) with \(\left|A_x-\textrm{Id}\right|\le C(N)\delta '\) for which, setting \(v:=A_x\circ u:B_5(p)\rightarrow {\mathbb {R}}^N\), it holds

for some integrable function \(f:B_5(p){\setminus } E\rightarrow [0,\infty )\). The strategy is borrowed from [BNS22] (see also the earlier [CC00]): it is based on a weighted maximal function argument and a telescopic estimate building on the Poincaré inequality, and it heavily exploits the \(L^2\)-Hessian bounds for splitting maps. The small content bound (1.18) allows the construction to be iterated on the bad balls \(B_{r_i}(x_i)\) and the results to be summed up into a geometric series.

In order to control the approximating measure \(\mu _r\) on almost Euclidean balls, it is enough to plug (1.19) into the quantitative volume bound (1.14).

To prove that the metric measure boundary is vanishing we need to show that at \({\mathscr {H}}^N\)-almost every point it is possible to slightly perturb the map v above so that, morally, \(f(x)=0\). To this aim we perturb the splitting function v at the second order so that, roughly speaking, it has vanishing Hessian at a fixed point x. The idea is to use a quadratic polynomial in the components of v to make the second order terms in the Taylor expansion of v at x vanish. However, its implementation is technically demanding and requires the second order differential calculus on \({{\,\textrm{RCD}\,}}\) spaces developed in [G18]. The construction is of independent interest and it plays the role of [KLP21, Lemma 6.2] (see also [Per95]) in the present setting.

2 Metric measure boundary and regular balls

The aim of this section is to prove Theorem 1.2 by assuming the following \(\varepsilon \)-regularity theorem. The latter provides effective controls on the boundary measure for regular balls. Here and in the following, we say that a ball \(B_r(p)\) of an \({{\,\textrm{RCD}\,}}(-(N-1),N)\) space is \(\delta \)-regular if

Theorem 2.1

(\(\varepsilon \)-regularity). For every \(\varepsilon >0\) and \(N\in {\mathbb {N}}_{\ge 1}\), there exists \(\delta (N,\varepsilon )>0\) such that for all \(\delta <\delta (N,\varepsilon )\) the following holds. If \((X,{\textsf{d}},{\mathscr {H}}^N)\) is an \({{\,\textrm{RCD}\,}}(-\delta (N-1),N)\) space, \(p\in X\), and \(B_{10}(p)\) is \(\delta \)-regular, then

Moreover, \(\left|\mu _r\right|(B_1(p))\rightarrow 0\) as \(r\downarrow 0\).

2.1 Proof of Theorem 1.2.

We combine the \(\varepsilon \)-regularity result Theorem 2.1 with the quantitative covering argument [BNS22, Theorem 5.2]. We also refer the reader to the previous works [JN16, CJN21], where this type of quantitative covering argument originates, and to [LN20, KLP21] for similar results in the setting of Alexandrov spaces.

We recall that \(B_{r}(p)\) is said to be an \(\eta \)-boundary ball provided

where we denoted by \({\mathbb {R}}^N_+\) the Euclidean half-space of dimension N with canonical metric.

Theorem 2.2

(Boundary-Interior decomposition theorem). For any \(\eta >0\) and \({{\,\textrm{RCD}\,}}(-(N-1),N)\) space \((X,{\textsf{d}},{\mathscr {H}}^N)\) with \(p\in X\) such that \({\mathscr {H}}^N(B_1(p))\ge v\), there exists a decomposition

such that the following hold:

i) the balls \(B_{20r_a}(x_a)\) are \(\eta \)-boundary balls and \(r_a^2\le \eta \);

ii) the balls \(B_{20r_b}(x_b)\) are \(\eta \)-regular and \(r_b^2\le \eta \);

iii) \({\mathscr {H}}^{N-1}(\tilde{{\mathcal {S}}})=0\);

iv) \(\sum _br_b^{N-1}\le C(N,v,\eta )\);

v) \(\sum _ar_a^{N-1}\le C(N,v)\).

We point out that the statement of Theorem 2.2 is slightly different from the original one in [BNS22, Theorem 5.2] as we claim that the balls \(B_{20r_b}(x_b)\) are \(\eta \)-regular, rather than considering the balls \(B_{2r_b}(x_b)\). This minor variant follows from the very same strategy.

Let us now prove the effective bound (1.4). Fix \(\eta <1/4\). We apply Theorem 2.2 to find the cover

where \({\mathscr {H}}^{N-1}(\tilde{{\mathcal {S}}})=0\), the balls \(B_{20r_b}(x_b)\) are \(\eta \)-regular with \(r_b^2\le \eta \) for any b, and

Notice that boundary balls do not appear in the decomposition as we are assuming that

Indeed, any boundary ball intersects \(\partial X\) as a consequence of [BNS22, Theorem 1.2]. Hence if a boundary ball appears in the decomposition, then \(B_{r_a}(x_a)\cap B_1(p)\ne \emptyset \) and \(B_{r_a}(x_a)\subset B_2(p)\), contradicting (2.7).

We fix \(\varepsilon =1/10\) and choose \(\eta : = \delta (N,1/10)\) given by Theorem 2.1. Then we estimate

by distinguishing two cases: if \(r<r_b\), then the scale invariant version of Theorem 2.1 applies yielding

If \(r>r_b\), then it is elementary to estimate

The combination of (2.6), (2.8), (2.9) and (2.10) shows that

as we claimed.
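The mechanism behind the two cases can be sketched as follows. This is only an outline under stated assumptions: we take the density of \(\mu _r\) to be \({\mathcal {V}}_r/r\) as in [KLP21], we use that \(|{\mathcal {V}}_r|\le C(N)\) for \(r\le 1\) and \({\mathscr {H}}^N(B_{r_b}(x_b))\le C(N)r_b^N\) by the Bishop-Gromov inequality, and we note that \(\tilde{{\mathcal {S}}}\) is \({\mathscr {H}}^N\)-negligible, hence not charged by \(\mu _r\). The precise form and constants of the estimates in the text may differ.

\[
|\mu _r|(B_{r_b}(x_b))\le
\begin{cases}
\varepsilon \, r_b^{N-1} & \text{if } r<r_b \ \text{(rescaled Theorem 2.1)},\\[4pt]
\dfrac{C(N)}{r}\,{\mathscr {H}}^N(B_{r_b}(x_b))\le C(N)\,\dfrac{r_b^{N}}{r}\le C(N)\, r_b^{N-1} & \text{if } r\ge r_b,
\end{cases}
\]

\[
|\mu _r|(B_1(p))\le \sum _b|\mu _r|(B_{r_b}(x_b))\le C(N)\sum _br_b^{N-1}\le C(N,v).
\]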

We finally prove that \(|\mu _r|(B_1(p))\rightarrow 0\) as \(r\downarrow 0\) by employing (the scale invariant version of) (2.11). We appeal once more to the covering \(\{B_{r_b}(x_b)\}_{b\in {\mathbb {N}}}\). For any \(M>1\) we write

Thanks to (2.11), we can estimate

By using that \(|\mu _r(B_t(x))|\rightarrow 0\) as \(r\downarrow 0\) when \(B_t(x)\) is a \(\delta (N,1/10)\)-regular ball (see Theorem 2.1), we get

The sought conclusion follows by combining (2.12), (2.13), (2.14) and sending \(M\rightarrow \infty \).

3 Quantitative volume convergence via splitting maps

It is a classical fact [CC96, C97, C01, DPG18, BNS22] that for any \(\varepsilon >0\) there exists \(\delta =\delta (\varepsilon ,N)>0\) such that the following holds: If \((X,{\textsf{d}},{\mathfrak {m}})\) is an \({{\,\textrm{RCD}\,}}(-\delta ^2(N-1),N)\) space and \(u:B_{10}(p)\rightarrow {\mathbb {R}}^N\) is a \(\delta ^2\)-splitting map (see Definition 3.1 below), then

The main result of this section is a quantitative version of (3.1) where \(\varepsilon \) is estimated explicitly in terms of C(N) and a power of \(\delta \). Before stating it, we recall the definition of \(\delta \)-splitting map and we introduce the relevant terminology.

Given an \({{\,\textrm{RCD}\,}}(-(N-1),N)\) space \((X,{\textsf{d}},{\mathscr {H}}^N)\), \(p\in X\), and a harmonic map \(u:B_{10}(p) \rightarrow {\mathbb {R}}^N\), we define \({\mathcal {E}}:B_{10}(p)\rightarrow [0,\infty )\) by

Definition 3.1

Let \((X,{\textsf{d}},{\mathscr {H}}^N)\) be an \({{\,\textrm{RCD}\,}}(-(N-1),N)\) space and fix \(p\in X\). We say that a harmonic map \(u:B_{10}(p)\rightarrow {\mathbb {R}}^N\) is a \(\delta \)-splitting map provided

We refer to [CN15, CJN21, BNS22] for related results about harmonic splitting maps on spaces with lower Ricci bounds.

Proposition 3.2

(Quantitative volume convergence). For every \(N\in {\mathbb {N}}_{\ge 1}\), there exists a constant \(C(N)>0\) such that the following holds. Let \((X,{\textsf{d}},{\mathscr {H}}^N)\) be an \({{\,\textrm{RCD}\,}}(-(N-1),N)\) space. Let \(p\in X\) and let \(u:B_{10}(p)\rightarrow {\mathbb {R}}^N\) be a harmonic \(\delta \)-splitting map, for some \(\delta \le \delta (N)\). Then for any \(x\in B_4(p)\) it holds

for any \(0<r<1\).

Remark 3.3

In particular, the above is a quantitative version of the classical volume \(\varepsilon \)-regularity theorem for almost Euclidean balls, where the closeness to the Euclidean model can be quantified in terms of the best splitting map \(u:B_4(p)\rightarrow {\mathbb {R}}^N\).

The elliptic regularity for harmonic functions on \({{\,\textrm{RCD}\,}}(-(N-1),N)\) spaces guarantees that any \(\delta \)-splitting map \(u:B_{10}(p)\rightarrow {\mathbb {R}}^N\) as above is C(N)-Lipschitz on \(B_{9}(p)\).

Moreover the map u satisfies the following sharp Lipschitz bound and \(L^2\)-Hessian bound:

We refer to [HP22, Lemma 4.3] for the proof of the sharp Lipschitz bound, which is a variant of an argument originating in [CN15]. The \(L^2\)-Hessian bound can be easily obtained by integrating Bochner’s inequality with Hessian term against a good cut-off function and employing (3.3); this argument originated in [CC96] (see also [BPS19] for the implementation in the \({{\,\textrm{RCD}\,}}\) setting). We refer to [G18] for the relevant terminology and background about second order calculus on \({{\,\textrm{RCD}\,}}\) spaces.

Remark 3.4

More generally, if \((X,{\textsf{d}},{\mathfrak {m}})\) is an \({{\,\textrm{RCD}\,}}(-\delta ^2(N-1),N)\) space, and there exists a \(\delta \)-splitting map \(u:B_{10}(p)\rightarrow {\mathbb {R}}^N\), then \({\mathfrak {m}}={\mathscr {H}}^N\) up to multiplicative constants, i.e. the space is noncollapsed, see [DPG18, H19, BGHX21].

3.1 Volume convergence and approximate distance.

We fix an \({{\,\textrm{RCD}\,}}(-(N-1),N)\) space \((X,{\textsf{d}},{\mathscr {H}}^N)\) and a point \(x\in X\). We consider a C(N)-Lipschitz function

belonging to the domain of the Laplacian on \(B_2(x)\). It is well-known that, if the lower Ricci bound is reinforced to nonnegative Ricci curvature, and

then \(B_1(x)\) is a metric cone and \(r(\cdot )={\textsf{d}}(x,\cdot )\), see [CC96, DPG16]. In particular

The next result provides a quantitative control on the derivative of the volume ratio in terms of suitable norms of the error terms in (3.8), \(|\nabla r|^2-1\) and \(\Delta r^2 - 2N\). In its proof, as well as several times in the paper, we will make use of the coarea formula. Let us recall the statement in the simplified form we will need (we refer the reader to [M03, Proposition 4.2], and to [ABS19, BPS19] for the representation formula for the perimeter measure in terms of Hausdorff measures).

Theorem 3.5

(Coarea formula). Let \((X,{\textsf{d}}, {\mathscr {H}}^N)\) be an \({{\,\textrm{RCD}\,}}(-(N-1),N)\) space for some \(N\ge 1\). Let \(v:X\rightarrow [0,\infty )\) be the distance function from a compact set \(E\Subset X\), i.e. \(v(\cdot )={\textsf{d}}(E, \cdot )\). Then \(\{v>r\}\) has finite perimeter for \({\mathscr {L}}^1\)-a.e. \(r>0\) and, for every Borel function \(f:X\rightarrow [0,\infty ]\), it holds:

Lemma 3.6

For \({\mathscr {L}}^1\)-a.e. \(0<t<1\) it holds

In particular, if \(x\in X\) is a regular point, it holds that for every \(t\in (0,1)\),

Proof

For any \(x\in X\), the function

is locally Lipschitz and differentiable at every \(t\in (0,\infty )\). Moreover,

for a.e. \(t\in (0,\infty )\), as a consequence of the coarea formula (3.10). We also notice that \({\mathscr {H}}^{N-1}(\partial B_t(x))={{\,\textrm{Per}\,}}(B_t(x))\) for a.e. \(t\in (0,\infty )\) and that

where the last estimate is a direct consequence of (3.13) and Bishop-Gromov inequality [S06b, Theorem 2.3] (see also [KL16, Theorem 5.2]).

For a.e. \(t\in (0,1)\) it holds

since \({\mathscr {H}}^N\)-a.e. on X it holds that \(\left|\nabla {\textsf{d}}_x\right|=1\).

Let us estimate I in (3.15):

Away from a further \({\mathscr {L}}^1\)-negligible set of radii \(t\in (0,1)\), we can estimate II with the Gauss-Green formula from [BPS19], after recalling that the exterior unit normal of \(B_t(x)\) coincides \({\mathscr {H}}^{N-1}\)-a.e. with \(\nabla {\textsf{d}}_x\), see [BPS21, Proposition 6.1]. We obtain

The combination of (3.16) and (3.17) together with (3.15) proves that

Hence, combining (3.13) and (3.14) with the last estimate, we get

The second conclusion in the statement follows by integrating the first one, as the function

is locally Lipschitz and limits to 1 as \(s\downarrow 0\). \(\square \)

3.2 Proof of Proposition 3.2.

Let \(u:B_{10}(p) \rightarrow {\mathbb {R}}^N\) be a \(\delta \)-splitting map. Fix \(x\in B_2(p)\). Up to the addition of some constant that does not affect the forthcoming statements, we can assume that \(u(x) =0\) and define

We estimate the gap between r and the distance function from x and between \(\Delta r^2\) and 2N in terms of the quantity \({\mathcal {E}}\) introduced in (3.2).

Lemma 3.7

The following inequalities hold \({\mathscr {H}}^N\)-a.e. in \(B_8(p)\):

Proof

The first conclusion follows from the sharp Lipschitz bound (3.5). Indeed,

To show (3.20) we employ the identities

that can be obtained via the chain rule taking into account that \(\Delta u=0\), together with some elementary algebraic manipulations. \(\square \)
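For the reader's convenience, the chain-rule computation can be sketched as follows, with \(r^2=\sum _iu_i^2\) and \(\Delta u_i=0\). This is the standard calculation; the identities in the text may be arranged differently.

\[
\nabla r^2=2\sum _{i}u_i\nabla u_i,\qquad \Delta r^2=2\sum _i|\nabla u_i|^2+2\sum _iu_i\Delta u_i=2\sum _i|\nabla u_i|^2,
\]

\[
\Delta r^2-2N=2\sum _i\big (|\nabla u_i|^2-1\big ),\qquad |\nabla r|^2-1=\frac{1}{r^2}\sum _{i,j}u_iu_j\big (\nabla u_i\cdot \nabla u_j-\delta _{ij}\big )\quad \text{on } \{r>0\}.
\]

Both error terms are therefore controlled by the deviation of the Gram matrix \(\nabla u_i\cdot \nabla u_j\) from the identity.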

Corollary 3.8

Under the same assumptions and with the same notation above, for \({\mathscr {L}}^1\)-a.e. \(0<t<1\) it holds that

Moreover, if \(x\in X\) is regular, then for any \(0<r<1\) it holds

Proof

In order to prove (3.21), it is sufficient to employ (3.11) in combination with Lemma 3.7. Indeed, the bound for the first two summands at the right hand side of (3.11) follows directly from (3.20). In order to bound the last summand we notice that, thanks to the sharp Lipschitz estimate (3.5) applied on the ball \(B_{4t}(x)\),

for any \(y\in B_t(x)\), hence

The estimate (3.22) follows by integrating (3.21) in t. Indeed, integrating by parts in t and using the coarea formula (3.10), we obtain

By using the Bishop-Gromov inequality, we estimate

The claimed bound (3.22) follows then by integrating (3.21) in t, taking into account (3.24) and (3.25). \(\square \)

Given Corollary 3.8, to conclude the proof of Proposition 3.2 it is enough to estimate the negative part of

This goal can be easily achieved using the Bishop-Gromov inequality, arguing as in [KLP21]:

where \(v_{-1,N}(r)\) is the volume of the ball of radius r in the model space of constant sectional curvature \(-1\) and dimension \(N\in {\mathbb {N}}\).

Using the well-known expansion of \(v_{-1,N}(r)\) around 0 and the Bishop-Gromov inequality, we deduce that

In conclusion, the combination of (3.22) with (3.26) and (3.27) proves the following:

4 Second order corrections

In order to prove that the metric measure boundary is vanishing directly through the quantitative volume estimate (cf. Proposition 3.2) we would need to build \(\delta \)-splitting maps whose Hessian is zero, in a suitable sense, on a big set. This seems hopeless, even on general smooth Riemannian manifolds, as the gradients of the components of the splitting map would be parallel vector fields. In order to overcome this issue we argue as follows:

(i) First, we show that the metric measure boundary is absolutely continuous with respect to \({\mathscr {H}}^N\); this is done in Section 5 below.

(ii) In a second step, we show that the density of the boundary measure with respect to \({\mathscr {H}}^N\) is zero almost everywhere; this will be an outcome of Proposition 4.1 in this section.

We remark that after establishing (i), the vanishing of the metric measure boundary for Alexandrov spaces with empty boundary would follow directly from [KLP21, Theorem 1.7].

In order to prove (ii), a key step is to build maps whose Hessian vanishes at a fixed point. In order to do so, we will allow for some extra flexibility on the \(\delta \)-splitting map. More precisely, for \({\mathscr {H}}^N\)-a.e. \(x\in X\) we can build an almost \(\delta \)-splitting map \(u: B_{10}(p)\rightarrow {\mathbb {R}}^N\), meaning that \(\Delta u\) is not necessarily zero in a neighbourhood of x but rather converges to 0 at a sufficiently fast rate at x, that satisfies

The construction of these maps is of independent interest and is carried out in subsection 4.1.

Proposition 4.1

Let \((X,{\textsf{d}},{\mathscr {H}}^N)\) be an \({{\,\textrm{RCD}\,}}(-(N-1),N)\) space. Then for \({\mathscr {H}}^N\)-a.e. \(x\in X\) it holds

Remark 4.2

The volume convergence and the classical regularity theory imply that

An improved convergence rate \(o(r^{\alpha })\), for some \(\alpha =\alpha (N)<1\) should follow from the arguments in [C97, CC97, CC00] for noncollapsed Ricci limit spaces. More precisely, in [CC00, Section 3], it was explicitly observed that one can obtain a rate of convergence for the scale invariant Gromov-Hausdorff distance between balls \(B_r(x)\) and Euclidean balls on a set of full measure. It seems conceivable that, along those lines, one can also obtain

for some \(\alpha =\alpha (N)<1\), for \({\mathscr {H}}^N\)-a.e. x. However, to the best of our knowledge, the existence of a single point where the o(r) volume convergence rate (4.2) holds is new even for Ricci limit spaces. This improvement will play a pivotal role in the analysis of the metric measure boundary.

The proof of Proposition 4.1 is based on a series of auxiliary results and it is postponed to the end of the section. The strategy is to apply Lemma 3.6 to a different function r defined out of the new coordinates built in subsection 4.1. In subsection 4.2 we check that r, \(|\nabla r|^2 - 1\) and \(\Delta r^2 - 2N\) enjoy all the needed asymptotic estimates.

4.1 \(\delta \)-splitting maps with vanishing Hessian at a reference point.

The almost \(\delta \)-splitting map with vanishing Hessian at a given point is built in Lemma 4.5. The key step in the construction is provided by Proposition 4.3 below.

Proposition 4.3

For any \(\varepsilon >0\), if \(\delta \le \delta (N,\varepsilon )\) the following property holds. Given an \({{\,\textrm{RCD}\,}}(-(N-1),N)\) metric measure space \((X,{\textsf{d}},{\mathscr {H}}^N)\), \(p\in X\), and a harmonic \(\delta \)-splitting map \(u:B_{10}(p)\rightarrow {\mathbb {R}}^N\), there exists a set \(E\subset B_1(p)\) such that the following hold:

-

(i)

\({\mathscr {H}}^N(B_1(p){\setminus } E)\le \varepsilon \);

-

(ii)

for any \(x\in E\) there exists an \(N\times N\) matrix \(A_x\) such that \(\left|A_x-\textrm{Id}\right|\le \varepsilon \) and the map \(u^x:=A_x\circ u:B_{10}(p)\rightarrow {\mathbb {R}}^N\) verifies

-

(a)

\(\nabla u^x_i(x)\cdot \nabla u^x_j(x)=\delta _{ij}\) and x is a Lebesgue point of \(\nabla u^x_i(\cdot )\cdot \nabla u^x_j(\cdot ),\) for all \(i, j=1,\dots , N\), i.e.

(4.5)

(4.5) -

(b)

for any \(0<r<1\);

for any \(0<r<1\);

-

(a)

-

(iii)

for any \(x\in E\) and for any \(k=1,\dots , N\) there exist coefficients \(\alpha ^k_{ij}\) with \(\alpha ^k_{ij}=\alpha ^k_{ji}\) for any i, j, k, such that it holds

(4.6)

We state and prove an elementary lemma, Lemma 4.4. It says that any almost orthogonal matrix \(A\in {\mathbb {R}}^{N\times N}\) becomes exactly orthogonal after multiplication with some \(B\in {\mathbb {R}}^{N\times N}\) which is close to the identity. This result will be applied to \(A_{ij} := \nabla u_i(x) \cdot \nabla u_j(x)\) where \(u:B_{10}(p)\rightarrow {\mathbb {R}}^N\) is a \(\delta \)-splitting map and \(x\in B_1(p)\) is a point where \(|{{\,\textrm{Hess}\,}}u(x)|\) is small in an appropriate sense. The matrix B provided by Lemma 4.4 will be used to define a new \(\delta \)-splitting map \(v:= B\circ u\) which is well normalized at x.

Lemma 4.4

For any \(\delta \le \delta _0(N)\) the following property holds. For any \(M\in {\mathbb {R}}^{N\times N}\) satisfying

there exists \(A\in {\mathbb {R}}^{N\times N}\) such that

Proof

It is enough to consider \(A^{-1}= \sqrt{ M\cdot M^t}\), which is well-defined because \(M\cdot M^t\) is symmetric, positive definite, and invertible provided \(\delta \le \delta (N)\).

Notice that the square root is C(N)-Lipschitz in a neighbourhood of the identity, hence

Analogously, the inversion is C(N)-Lipschitz in a neighbourhood of the identity, hence

\(\square \)
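
As a sanity check on the choice made in the proof, the following sketch verifies that \(A=(M\cdot M^t)^{-1/2}\) turns M into an orthogonal matrix. The displayed hypothesis and conclusion of Lemma 4.4 are not reproduced above, so the smallness condition \(|M\cdot M^t-\textrm{Id}|\le \delta \) and the shorthand \(S:=M\cdot M^t\) are assumptions made only for this illustration. The constant C(N) may change from one inequality to the next.

$$\begin{aligned} S&:=M\cdot M^t,\qquad A:=S^{-1/2},\qquad A^t=A,\qquad A\cdot S=S\cdot A,\\ (A\cdot M)\cdot (A\cdot M)^t&=A\cdot M\cdot M^t\cdot A^t=S^{-1/2}\cdot S\cdot S^{-1/2}=\textrm{Id},\\ \left|A-\textrm{Id}\right|&\le C(N)\left|S^{1/2}-\textrm{Id}\right|\le C(N)\left|S-\textrm{Id}\right|\le C(N)\delta . \end{aligned}$$

The first inequality in the last line is the Lipschitz property of the inversion near the identity, and the second one is the Lipschitz property of the square root, exactly as in the two steps of the proof.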

Proof of Proposition 4.3

First of all, since \(u:B_{10}(p)\rightarrow {\mathbb {R}}^N\) is a \(W^{1,2}\)-Sobolev map, \({\mathscr {H}}^N\)-a.e. x is a Lebesgue point of \(\left|\nabla u_i\right|^2\) for all \(i=1,\ldots , N\) and of \(\nabla u_i\cdot \nabla u_j\) for any \(i,j=1,\dots ,N\). Without further comments, the sets \({\tilde{E}}\) and E constructed below will be assumed to be contained in such a set of full measure made of Lebesgue points of \(\nabla u_i\cdot \nabla u_j\).

Let us fix \(\delta <10^{-1}\) to be specified later in terms of \(\varepsilon \) and N. We set

A standard maximal function argument, along with the estimate

implies that \({\mathscr {H}}^N(B_1(p){\setminus } {{\tilde{E}}}) \le C(N)\delta \).
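
The maximal function step can be sketched as follows. The precise definition of \({\tilde{E}}\) and the integral estimate it relies on are not reproduced above, so the nonnegative function g, the threshold \(\lambda >0\) and the local maximal function Mg below are illustrative assumptions; only the weak-type (1,1) bound itself is standard on spaces with a uniformly doubling measure.

$$\begin{aligned} Mg(x)&:=\sup _{0<r<1}\frac{1}{{\mathscr {H}}^N(B_r(x))}\int _{B_r(x)}g\,\textrm{d}{\mathscr {H}}^N ,\\ {\mathscr {H}}^N\big (\{x\in B_1(p)\, :\, Mg(x)>\lambda \}\big )&\le \frac{C(N)}{\lambda }\int _{B_2(p)}g\,\textrm{d}{\mathscr {H}}^N . \end{aligned}$$

For instance, if \(g=|{{\,\textrm{Hess}\,}}u|^2\) had integral at most \(C(N)\delta ^2\) on \(B_2(p)\) and \({\tilde{E}}\) were the sublevel set \(\{Mg\le \delta \}\), this bound would give \({\mathscr {H}}^N(B_1(p){\setminus } {\tilde{E}})\le C(N)\delta \), in line with the estimate stated above.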

Let us fix \(x\in {{\tilde{E}}}\). The Poincaré inequality [VR08, R12] (cf. the proof of [BNS22, Lemma 4.16]) gives

for any \(r<1/2\). A standard telescopic argument implies that x is a Lebesgue point for \({\mathcal {E}}\) (cf. [BPS21, Corollary 2.9 and Remark 2.10]) and

If \(\delta \le \delta (N)\) is small enough, we can apply Lemma 4.4 and find \(A_x\) satisfying (ii)(a). To verify (ii)(b), we observe that

Let us finally prove (iii). First of all, the same telescopic argument as above gives

We define E as the set of those \(x\in {{\tilde{E}}}\) satisfying the following properties:

-

$$\begin{aligned} \lim _{r\downarrow 0} \frac{{\mathscr {H}}^N(B_r(x) {\setminus } {{\tilde{E}}})}{{\mathscr {H}}^N(B_r(x))} = \lim _{r\downarrow 0} \frac{1}{r^{N}}\int _{B_r(x){\setminus } {{\tilde{E}}}} |{{\,\textrm{Hess}\,}}u|^2 \textrm{d}{\mathscr {H}}^N = 0 ; \end{aligned}$$(4.17)

-

there exist \(\alpha _{ij}^k\in {\mathbb {R}}\) such that

(4.18)

for any \(i,j,k=1, \ldots ,N\).

Observe that

as a consequence of (4.18) and of the definition of \({{\tilde{E}}}\). Also, notice that \({\mathscr {H}}^N({{\tilde{E}}}{\setminus } E)=0\): it is obvious that (4.17) holds for \({\mathscr {H}}^N\)-a.e. \(x\in {{\tilde{E}}}\); regarding (4.18), we notice that it amounts to asking that x is a Lebesgue point of \({{\,\textrm{Hess}\,}}u(\nabla u_i,\nabla u_j)\) for any \(i,j=1, \ldots , N\). Indeed, multiplying with \(A_x\) does not change this property.

We now show (iii) for \(\alpha _{ij}^k\) defined as in (4.18). Fix \(x\in E\) and \(\eta \ll \delta \). Thanks to (4.17), we can find \(r_0=r_0(\eta )\le 1\) such that for any \(r<r_0\)

Moreover, thanks to (4.16) and (4.18), up to taking a smaller \(r_0=r_0(\eta )\le 1\), for any \(r<r_0\) there exists \(G_r\subset B_r(x)\cap {{\tilde{E}}}\) satisfying:

-

\(G_{r}\) has \(\eta \)-almost full measure in \(B_{r}(x)\), i.e.

$$\begin{aligned} {\mathscr {H}}^N(B_r(x){\setminus } G_r)\le \eta {\mathscr {H}}^N(B_r(x)); \end{aligned}$$(4.21)

-

for any \(y\in G_r\) it holds

$$\begin{aligned} \sum _{i,j} |\nabla u_i^x(y) \cdot \nabla u_j^x(y) - \delta _{ij}| \le \eta \, \end{aligned}$$(4.22)

and

$$\begin{aligned} \left|{{\,\textrm{Hess}\,}}u_k^x(y)(\nabla u_i^x(y), \nabla u_j^x(y)) + \alpha _{ij}^k\right| \le \eta , \end{aligned}$$(4.23)

for any \(i,j,k=1, \ldots , N\).

In particular \(A_{ij}:=\nabla u_i^x(y)\cdot \nabla u_j^x(y)\) is invertible for any \(y\in G_r\).

Fix \(r<r_0\). We denote by \(L^2(TX)\) the \(L^\infty \)-module of velocity fields over X, and by \(L^2(TX\otimes TX)\) the \(L^\infty \)-module of 2-tensors. We refer the reader to [G18] for the relevant background and terminology.

The identification of \(L^2(TX)\) with the asymptotic GH-limits provided in [GP16] implies that the family \(\{ \nabla u^x_i \, : \, i=1, \ldots , N\} \subset L^2(TX)\) is independent on \(G_r\) (cf. [G18, Definition 1.4.1]). Using that \(L^2(TX)\) has dimension N, see [DPG18], we infer that

is a basis of \(L^2(TX\otimes TX)\) on \(G_r\), according to [G18, Definition 1.4.3] (see also [BPS21, Lemma 2.1]). In particular, there exists a family of measurable functions \(\{f_{i,j}^k\}_{i,j,k}\) such that

see the discussion in [G18, Page 36].

Thanks to (4.25), (4.20), (4.22) and (4.23), we deduce the following pointwise inequalities in \(G_r\) for any \(i,j,k=1,\ldots , N\):

Using again (4.22) and (4.23), we deduce

which gives in turn

We finally observe that

where we used (4.21), the fact that \(|{{\,\textrm{Hess}\,}}u|^2 \le \delta \) in \({{\tilde{E}}}\), and (4.20).

By combining (4.26) and (4.27), we obtain

which implies the sought conclusion due to the arbitrariness of \(\eta \) and \(r\le r_0(\eta )\). \(\square \)

Given any point \(x\in E\) as in the statement of Proposition 4.3, up to the addition of a constant that does not affect the forthcoming statements we can assume that \(u^x(x)=0\in {\mathbb {R}}^N\). We introduce the function \(v:B_1(p)\rightarrow {\mathbb {R}}^N\) by setting

The point \(x\in E\) as in the statement of Proposition 4.3 will be fixed from now on, so there will be no risk of confusion.

Below we are concerned with the properties of the function v as in (4.29). Notice that, on a smooth Riemannian manifold, v would have vanishing Hessian at x and verify \(\nabla v_i(x)\cdot \nabla v_j(x)=\delta _{ij}\), by its very construction.

Lemma 4.5

Under the same assumptions and with the same notation introduced above, the map \(v:B_1(p)\rightarrow {\mathbb {R}}^N\) as in (4.29) has the following properties:

-

i)

for any \(i,j=1,\dots ,N\) it holds

(4.30)

-

ii)

for any \(k=1,\dots , N\), it holds

(4.31)

Proof

Employing the standard calculus rules, let us compute the derivatives of v:

As \(u^x(x)=0\), \(\nabla u^x_i(x)\cdot \nabla u^x_j(x)=\delta _{ij}\) and (4.5) holds by construction, (4.32) shows that

and

Then we estimate

Integrating (4.34) over \(B_t(x)\) and using the uniform Lipschitz estimates for u

we obtain

By using (4.6) and Proposition 4.3 (ii)(b), we conclude that

\(\square \)
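
The derivative computation at the start of this proof can be sketched under an explicit assumption. The definition (4.29) is not reproduced above; the quadratic correction in the first line below is a guess that is consistent with the sign convention in (4.23) and with the remark that v would have vanishing Hessian at x on a smooth manifold, and it should not be read as the authors' formula. The second and third lines follow from the first by the Leibniz rule and the symmetry \(\alpha ^k_{ij}=\alpha ^k_{ji}\).

$$\begin{aligned} v_k&:=u^x_k+\frac{1}{2}\sum _{i,j}\alpha ^k_{ij}\,u^x_i\,u^x_j \qquad \text {(assumed form of (4.29))},\\ \nabla v_k&=\nabla u^x_k+\sum _{i,j}\alpha ^k_{ij}\,u^x_i\,\nabla u^x_j ,\\ {{\,\textrm{Hess}\,}}v_k&={{\,\textrm{Hess}\,}}u^x_k+\sum _{i,j}\alpha ^k_{ij}\,\nabla u^x_i\otimes \nabla u^x_j+\sum _{i,j}\alpha ^k_{ij}\,u^x_i\,{{\,\textrm{Hess}\,}}u^x_j . \end{aligned}$$

Under this assumption, every term carrying a factor \(u^x_i\) vanishes at x because \(u^x(x)=0\), so that \(\nabla v_k(x)=\nabla u^x_k(x)\), while the first two terms of the Hessian form the combination whose contraction with \(\nabla u^x_i\), \(\nabla u^x_j\) is estimated in (4.23).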

4.2 Volume estimates via almost splitting map with vanishing Hessian.

Given \(v:B_1(p)\rightarrow {\mathbb {R}}^N\) as in Lemma 4.5 we introduce the function \(r:B_1(p)\rightarrow [0,\infty )\) by

We aim at showing that r is a polynomially good approximation of the distance from x and, at the same time, an approximate solution of \(\Delta r^2=2N\) in an integral sense. With some algebraic manipulations and the standard chain rules, we obtain the following.

Lemma 4.6

With the same notation as above the following hold:

-

i)

$$\begin{aligned} \Delta r^2=2\sum _i\left[ \left|\nabla v_i\right|^2+v_i\Delta v_i\right] ; \end{aligned}$$(4.36)

-

ii)

$$\begin{aligned} \left|\left|\nabla r\right|^2-1\right|\le \sum _{i,j}\left|\nabla v_i\cdot \nabla v_j-\delta _{ij}\right| . \end{aligned}$$(4.37)

Proof

The expression for the Laplacian (4.36) follows from the chain rule by the very definition \(r^2=\sum _iv_i^2\).

In order to obtain the gradient estimate, we compute

Hence

Then we split

Hence

eventually proving (4.37). \(\square \)
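
The two identities used in this proof can be written out explicitly. The sketch below relies only on the definition \(r^2=\sum _iv_i^2\) and the chain rule; it is a reconstruction of the routine computation and not necessarily the authors' exact sequence of displays. It is understood on the set where \(r>0\).

$$\begin{aligned} \Delta r^2&=\sum _i\Delta (v_i^2)=2\sum _i\left[ \left|\nabla v_i\right|^2+v_i\Delta v_i\right] ,\\ r\,\nabla r&=\frac{1}{2}\nabla r^2=\sum _iv_i\nabla v_i ,\\ r^2\left( \left|\nabla r\right|^2-1\right)&=\sum _{i,j}v_iv_j\left( \nabla v_i\cdot \nabla v_j-\delta _{ij}\right) ,\\ \left|\left|\nabla r\right|^2-1\right|&\le \sum _{i,j}\frac{|v_i|\,|v_j|}{r^2}\left|\nabla v_i\cdot \nabla v_j-\delta _{ij}\right|\le \sum _{i,j}\left|\nabla v_i\cdot \nabla v_j-\delta _{ij}\right| . \end{aligned}$$

The third line uses \(\sum _{i,j}v_iv_j\delta _{ij}=r^2\), and the last step uses \(|v_i|\le r\) for every i.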

We will rely on the following technical result. Before stating it, recall that given any point x in an \({{\,\textrm{RCD}\,}}(K,N)\) space \((X,{\textsf{d}},{\mathfrak {m}})\), for \({\mathfrak {m}}\)-a.e. \(y\in X\) there is a unique geodesic connecting y to x (see for instance [GRS16, Corollary 1.4]).

Lemma 4.7

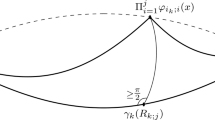

Let \((X,{\textsf{d}},{\mathscr {H}}^N)\) be an \({{\,\textrm{RCD}\,}}(-(N-1),N)\) space. Let \(v>0\). There exists a constant \(C(N,v)>0\) such that for any \(x\in X\), if \({\mathscr {H}}^N(B_{3/2}(x){\setminus } B_1(x))>v\), then for any nonnegative function \(f\in L^{\infty }(B_1(x))\) and for almost every \(0<t<1\) it holds

where for \({\mathscr {H}}^N\)-a.e. \(y\in B_1(x)\) we denote by \(\gamma _y\) the unique minimizing geodesic between \(\gamma _y(0)=x\) and \(\gamma _y({\textsf{d}}(x,y))=y\).

Proof

Under the assumption that \({\mathscr {H}}^N(B_{3/2}(x){\setminus } B_1(x))>v\), the following inequalities hold, by Bishop-Gromov monotonicity for spheres [S06b, Theorem 2.3] (see also [KL16, Theorem 5.2]) and the coarea formula (3.10):

and

for any \(0<t<1\) and any \(0<\varepsilon <1/10\).

It is enough to check that for any nonnegative continuous function f on \(B_1(x)\) it holds

for a.e. \(0< s \le t\le 1\). Indeed, if (4.42) holds, then

where we used (4.39) and (4.40) for the last inequality.

Let us show (4.42). From the \({\textsf{MCP}}(-(N-1), N)\) property (which is satisfied by \({{\,\textrm{CD}\,}}(-(N-1),N)\) spaces and a fortiori by \({{\,\textrm{RCD}\,}}(-(N-1),N)\) spaces) and (4.41), we have

for any \(0< s \le t\le 1\). Passing to the limit as \(\varepsilon \downarrow 0\), taking into account the classical weak convergence of the normalized volume measure of the tubular neighbourhood to the surface measure for spheres, we obtain that (4.42) holds for a.e. \(0< s \le t \le 1\).

\(\square \)

Proposition 4.8

Under the same assumptions and with the same notation as above, the following asymptotic estimates hold for the function \(r:B_1(x)\rightarrow [0,\infty )\):

-

i)

(4.43)

-

ii)

(4.44)

-

iii)

(4.45)

Proof

We start noticing that a standard application of the Poincaré inequality from [VR08, R12], in combination with (4.30) and (4.31), shows that for any \(i,j=1,\dots ,N\) it holds

Given (4.46), (4.44) follows from (4.37).

In order to prove (4.45), we employ (4.36) to estimate

Hence

The first summand above can be dealt with via (4.46). In order to bound the second one we notice that

where we used that \(v_i\) is C(N)-Lipschitz with \(v_i(x)=0\) and we rely on the known identity

for \({{\,\textrm{RCD}\,}}(K,N)\) spaces \((X,{\textsf{d}},{\mathscr {H}}^N)\), see [Ha18, DPG18]. All in all this proves (4.45).

We are left to prove (4.43). In order to do so, we consider geodesics \(\gamma _y\) from x to y, where \(\gamma _y(0)=x\) and \(\gamma _y(t)=y\). This geodesic is unique for \({\mathscr {H}}^N\)-a.e. y, hence it is unique for \({\mathscr {H}}^{N-1}\)-a.e. \(y\in \partial B_t(x)\) for \({\mathscr {L}}^1\)-a.e. \(t>0\). Then we notice that for \({\mathscr {H}}^N\)-a.e. \(y\in B_1(x)\) (hence for \({\mathscr {H}}^{N-1}\)-a.e. \(y\in \partial B_t(x)\) for \({\mathscr {L}}^1\)-a.e. \(t>0\)) it holds

Now we can integrate on \(\partial B_t(x)\) and get

In order to deal with the last summand, we notice that, by Lemma 4.7,

The combination of (4.47) and (4.48) proves that

Notice that the lower volume bound \({\mathscr {H}}^N(B_{3/2}(x){\setminus } B_1(x))>C(N)\) is satisfied after rescaling \({\textsf{d}}\mapsto t^{-1} {\textsf{d}}\) by volume convergence, under the current assumptions. Taking into account (4.44), (4.49) shows that (4.43) holds. \(\square \)

4.3 Proof of Proposition 4.1.

The proof is divided into three steps. In the first step, we show that on each \(\delta \)-regular ball at least half of the points satisfy

Then, in Step 2, we bootstrap this conclusion to get (4.50) at \({\mathscr {H}}^N\)-a.e. x in a \(\delta \)-regular ball. We conclude in Step 3 by covering X with \(\delta \)-regular balls up to a \({\mathscr {H}}^N\)-negligible set.

Step 1. Let \(\delta = \delta (N,1/5)\) as in Proposition 4.3. We claim that for any \(\delta \)-regular ball \(B_{4r}(p)\subset X\) there exists \(E\subset B_r(p)\) such that

Indeed, we can apply Proposition 4.3 and find \(E\subset B_r(p)\) satisfying (4.51) and (4.52). Moreover, for any \(x\in E\) there exists a function \(v:B_r(x)\rightarrow {\mathbb {R}}^N\) that, up to scaling, has all the good properties guaranteed by Lemma 4.5.

Under these assumptions, we can apply (3.12) to the map r introduced in (4.35) in terms of v. Recalling Proposition 4.8 (see also (3.26) and (3.27) for the estimate for the negative part), (3.12) shows that

Step 2. Let \(\delta '\le \delta '(\delta , N)\) with the property that if \(B_{10}(p)\) is \(\delta '\)-regular then \(B_r(x)\) is \(\delta \)-regular for any \(x\in B_2(p)\) and \(r<5\). We prove that if \(B_{10}(p)\) is a \(\delta '\)-regular ball, then

is \({\mathscr {H}}^N\)-negligible for any \(\eta >0\).

Let us argue by contradiction. If this is not the case, we can find a Lebesgue point \(x\in A_\eta \). In particular, there exists \(r<1\) such that \({\mathscr {H}}^N(B_r(x)\cap A_\eta )>\frac{1}{5} {\mathscr {H}}^N(B_r(x))\). As \(B_r(x)\) is a \(\delta \)-regular ball, the latter inequality contradicts Step 1.

Step 3. We conclude the proof by observing that there is a family of \(\delta '\)-regular balls \(\{B_{10 r_i}(x_i)\}_{i\in {\mathbb {N}}}\) such that \({\mathscr {H}}^N(X{\setminus } \bigcup _i B_{r_i}(x_i))=0\). This conclusion follows by a Vitali covering argument after recalling that \({\mathscr {H}}^N\)-a.e. point in X has a Euclidean tangent cone and taking into account the classical \(\varepsilon \)-regularity theorem for almost Euclidean balls, see [CC97, DPG18].

5 Control of the metric measure boundary

This section is devoted to the proof of the \(\varepsilon \)-regularity Theorem 2.1. The proof is divided into two main parts: an iteration lemma, where we establish uniform bounds for the deviation measures and vanishing of the metric measure boundary on an almost regular ball away from a set of small \((N-1)\)-dimensional content; and the iterative application of the lemma to establish the bounds and the limiting behaviour on the full ball.

Lemma 5.1

(Iteration Lemma). For every \(\varepsilon >0\), if \(\delta \le \delta (N,\varepsilon )\) the following property holds. If \((X,{\textsf{d}},{\mathscr {H}}^N)\) is an \({{\,\textrm{RCD}\,}}(-\delta (N-1),N)\) space and \(B_{20}(p) \subset X\) is a \(\delta \)-regular ball, then there exists a Borel set \(E\subset B_2(p)\) with the following properties:

-

i)

\(|\mu _r| (E) \le \varepsilon \) for any \(0<r<1\);

-

ii)

\(B_1(p){\setminus } E\subset \bigcup _i B_{r_i}(x_i)\) and \(\sum _ir_i^{N-1}\le \varepsilon \);

-

iii)

\(\left|\mu _r\right|(E)\rightarrow 0\) as \(r\downarrow 0\).

We postpone the proof of the iteration lemma and see how to establish the \(\varepsilon \)-regularity theorem by taking it for granted.

5.1 Proof of Theorem 2.1 given Lemma 5.1.

Let us fix \(r\in (0,1)\), and \(\varepsilon \le 1/5\), \(\delta \le \delta (N,\varepsilon )\) as in Lemma 5.1. We assume that \(B_{10}(p)\) is \(\delta '\)-regular for some \(\delta '=\delta '(\delta ,N)>0\) small enough so that \(B_s(x)\) is \(\delta \)-regular for any \(x\in B_5(p)\) and \(s\le 5\).

We apply Lemma 5.1 to get a Borel set \(E_1\subset B_3(p)\) such that

-

(a)

\(|\mu _r|(E_1) \le \varepsilon \);

-

(b)

\(B_2(p){\setminus } E_1 \subset \bigcup _a B_{r_a}(x_a) \cup \bigcup _b B_{r_b}(x_b) \) with \(\sum _a r_a^{N-1} + \sum _b r_b^{N-1} \le \varepsilon \);

-

(c)

\(r_a \le r\) and \(r_b > r\).

We set

and observe that

By (b) we deduce the existence of a constant \(c(N)\ge 1\) such that

To control \(|\mu _r|(B_{r_b}(x_b))\), we apply again Lemma 5.1 to any ball \(B_{r_b}(x_b)\), after rescaling \({\textsf{d}}\mapsto r_b^{-1} {\textsf{d}}\). Arguing as above, we obtain a set \(G_{b}\) (constructed analogously to \(G_1\) as in (5.1)) such that

-

(a’)

\(|\mu _r|(G_{b})\le c(N)\varepsilon r_b^{N-1}\);

-

(b’)

\(B_{r_b}(x_b){\setminus } G_{b} \subset \bigcup _{b_1} B_{r_{b,b_1}}(x_{b,b_1})\) and \(\sum _{b_1} r_{b,b_1}^{N-1} \le \varepsilon r_b^{N-1}\);

-

(c’)

\(r_{b,b_1} > r\).

After two steps of the iteration we are left with a good set

such that

as a consequence of (5.2), (a’) and (b). Moreover,

If the family of bad balls \(B_{r_{b,b_1}}(x_{b,b_1})\) is not empty, we iterate this procedure. At the k-th step, we have a good set \(G_k\) such that

and bad balls satisfying

Notice that \(r_{i,k} \le \varepsilon ^{\frac{k}{N-1}}\), hence this procedure must stop after M steps, for some \(M\le (N-1) \frac{\log r}{\log \varepsilon }\). Therefore, \(B_2(p)\subset G_M\) and

The proof of (2.2) is completed.
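
The bound on the number of steps can be made explicit as follows. This is a sketch: it uses only the inequality \(r_{i,k}\le \varepsilon ^{\frac{k}{N-1}}\) stated above and the fact that bad balls are, by construction, those with radius larger than r. Recall that \(0<r<1\) and \(0<\varepsilon \le 1/5\), so both logarithms are negative.

$$\begin{aligned} r<r_{i,k}\le \varepsilon ^{\frac{k}{N-1}} \quad \Longrightarrow \quad \log r<\frac{k}{N-1}\log \varepsilon \quad \Longrightarrow \quad k<(N-1)\frac{\log r}{\log \varepsilon } . \end{aligned}$$

Hence no bad ball can survive at a step k with \(k\ge (N-1)\frac{\log r}{\log \varepsilon }\), which is the stopping condition used in the proof.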

Let us now prove that \(|\mu _r|(B_1(p))\rightarrow 0\) as \(r\downarrow 0\). As a consequence of (5.3), we can extract a weak limit in \(B_2(p)\)

By the scale invariant version of (2.2), we deduce

To conclude the proof, it is enough to show that \(\mu (B_{1}(p))=0\). To this aim we apply an iterative argument analogous to the one above. Using the iteration Lemma 5.1, we cover

and observe that

where we used (5.4) and Lemma 5.1 (iii).

We apply the same decomposition to each ball

obtaining

After k steps of the iteration we deduce \(\mu (B_1(p)) \le \varepsilon ^{k+1}\). We conclude by letting \(k\rightarrow \infty \).

5.2 Proof of Lemma 5.1.

Let \(\delta '=\delta '(\varepsilon ,N)>0\) be chosen later. If \(\delta \le \delta (\delta ',\varepsilon ,N)\), we can build a \(\delta '\)-splitting map \(u:B_{10}(p)\rightarrow {\mathbb {R}}^N\). We consider the set E of those points \(x\in B_1(p)\) such that

where \(\eta =\eta (N)\) will be chosen later. For any \(x\in E\) and \(r\le 1\), the Poincaré inequality gives

which along with a telescopic argument (cf. the proof of [BNS22, Lemma 4.16] and [CC00]) gives

We assume \(\eta =\eta (N)\) and \(\delta '=\delta '(\varepsilon ,N)\) small enough so that \(\delta ' + C(N)\eta ^{1/2}\le \delta _0(N)\), where the latter is given by Lemma 4.4. For any \(x\in E\), we apply Lemma 4.4 with \(A_{ij}= \nabla u_i(x)\cdot \nabla u_j(x)\) and we get a matrix \(B_x\in {\mathbb {R}}^{N\times N}\) such that \(|B_x|\le C(N)\) and \(v:= B_x \circ u: B_{10}(p)\rightarrow {\mathbb {R}}^N\) verifies

Since, by construction, any point \(x\in E\) is a Lebesgue point for \(\nabla u_i \cdot \nabla u_j\) (and thus for \(\nabla v_i \cdot \nabla v_j\)), it follows that

Moreover, it is clear that \(|{{\,\textrm{Hess}\,}}v|\le C(N) |{{\,\textrm{Hess}\,}}u|\).

Applying again a telescopic argument based on the Poincaré inequality we infer that

for any \(0<r<1\), where

is the maximal function of \(\left|{{\,\textrm{Hess}\,}}u\right|\).

Combining Corollary 3.8 with (5.5) gives

for \({\mathscr {H}}^N\)-a.e. \(x\in E\) and for any \(0<r<1\). In particular,

The classical \(L^2\) maximal function estimate gives

Therefore, \(M_{10}\left|{{\,\textrm{Hess}\,}}u\right|\) is integrable and dominates uniformly the sequence

on E. Moreover, \(f_r(x) \rightarrow 0\) as \(r\downarrow 0\) for \({\mathscr {H}}^N\)-a.e. \(x\in X\) by Proposition 4.1. Hence by the dominated convergence theorem

proving (iii).

In order to get the \((N-1)\)-dimensional content bound (ii), we employ a weighted maximal function argument (see for instance the proof of [BNS22, Proposition 4.19]) to show that \(B_1(p){\setminus } E\) can be covered by a countable union of balls \(\bigcup _iB_{r_i}(x_i)\) with

Indeed, for any \(x\in B_1(p)\) such that

we set \(r_x>0\) to be the maximal radius such that

By Ahlfors regularity of \({\mathscr {H}}^N\), we immediately deduce that

By a Vitali covering argument, we can cover \(B_1(p){\setminus } E\) with a countable union \(B_{r_i}(x_i)\) such that \(B_{r_i/5}(x_i)\) are disjoint. Then

This completes the proof of (i) and (ii) after choosing \(\delta '=\delta '(\varepsilon ,N)\) small enough so that the right hand sides in (5.7) and (5.9) are smaller than \(\varepsilon \).

6 Spaces with boundary

In this section we aim at controlling the metric measure boundary on \({{\,\textrm{RCD}\,}}(-(N-1),N)\) spaces \((X,{\textsf{d}},{\mathscr {H}}^N)\) with boundary satisfying fairly natural regularity assumptions.

Let \(0<\delta \le 1\) be fixed. We consider an \({{\,\textrm{RCD}\,}}(-\delta (N-1),N)\) space \((X,{\textsf{d}},{\mathscr {H}}^N)\) with boundary and we recall that a \(\delta \)-boundary ball \(B_1(p)\subset X\) is a ball satisfying

We will assume that the following conditions are met:

-

(H1)

The doubling \(({{\hat{X}}}, {{\hat{{\textsf{d}}}}}, {\mathscr {H}}^N)\) of X obtained by gluing along the boundary is an \({{\,\textrm{RCD}\,}}(-\delta (N-1),N)\) space.

-

(H2)

A Laplacian comparison for the distance from the boundary holds:

$$\begin{aligned} \Delta {\textsf{d}}_{\partial X} \le - \delta (N-1) {\textsf{d}}_{\partial X} \qquad \text {on }X{\setminus } \partial X . \end{aligned}$$(6.2)

It is still unknown whether (H1) and (H2) hold true in the \({{\,\textrm{RCD}\,}}\) class. However, they are satisfied on Alexandrov spaces, see [Per91, AB03, P07], and noncollapsed GH-limits of manifolds with convex boundary and Ricci curvature bounded from below in the interior, see [BNS22].

We shall denote by

where \((0,s)\in {\mathbb {R}}^{N-1}\times {\mathbb {R}}_+\). Moreover, we set

Under the assumptions above, our main result is the following.

Theorem 6.1

Let \((X,{\textsf{d}},{\mathscr {H}}^N)\) be an \({{\,\textrm{RCD}\,}}(-(N-1),N)\) space with boundary satisfying (H1) and (H2). Let \(p\in X\) and assume \({\mathscr {H}}^N(B_1(p))\ge v>0\). Then

Moreover,

where \(\gamma (N)>0\) is the constant defined in (6.4).

The proof of Theorem 6.1 is based on an \(\varepsilon \)-regularity theorem for the metric measure boundary on \(\delta \)-boundary balls, the \(\varepsilon \)-regularity Theorem 2.1 for regular balls and the boundary-interior decomposition Theorem 2.2. Below we state the \(\varepsilon \)-regularity theorem for boundary balls, and use it to complete the proof of Theorem 6.1. The rest of this section will be dedicated to the proof of the \(\varepsilon \)-regularity theorem.

Theorem 6.2

(\(\varepsilon \)-regularity on boundary balls). For any \(\varepsilon >0\) if \(\delta \le \delta (\varepsilon ,N)\) the following holds. For any \({{\,\textrm{RCD}\,}}(-\delta (N-1),N)\) space \((X,{\textsf{d}},{\mathscr {H}}^N)\) satisfying the assumptions (H1), (H2), if \(B_{10}(p)\subset X\) is a \(\delta \)-boundary ball, then

Moreover

Let us discuss how to complete the proof of Theorem 6.1, taking Theorem 6.2 for granted. The combination of Theorem 6.2, Theorem 2.1 and Theorem 2.2 implies that

where \(B_s(p)\) is any ball of an \({{\,\textrm{RCD}\,}}(-(N-1),N)\) space satisfying (H1) and (H2).

We let \(\mu \) be any weak limit of a sequence \(\mu _{r_i}\) with \(r_i\downarrow 0\). By Theorem 1.2, \(\mu \) is concentrated on \(\partial X\). Moreover, by (6.9), \(\mu =f\,{\mathscr {H}}^{N-1}\llcorner \partial X\) for some \(f\in L^1(\partial X, {\mathscr {H}}^{N-1})\). Indeed, \(\mu \) is absolutely continuous w.r.t. \({\mathscr {H}}^{N-1}\llcorner \partial X\), which is locally finite by [BNS22].

In order to show that f is constant \({\mathscr {H}}^{N-1}\)-a.e., it is sufficient to apply a standard differentiation argument via blow up, as \(\partial X\) is \((N-1)\)-rectifiable by [BNS22].

Let us fix \(x\in {\mathcal {S}}^{N-1}{\setminus } {\mathcal {S}}^{N-2}\) and \(\varepsilon >0\). Given \(\delta =\delta (\varepsilon ,N)>0\) as in Theorem 6.2, we can find \(r_0\le 1\) such that \(B_r(x)\) is a \(\delta \)-boundary ball for any \(r\le r_0\) by [BNS22, Theorem 1.4]. Then, by (6.8) and scale invariance, it holds

Since \({\mathscr {H}}^{N-1}({\mathcal {S}}^{N-2})=0\), by the arbitrariness of \(\varepsilon >0\) and standard differentiation of measures, we deduce that

6.1 Proof of Theorem 6.2.

The proof is divided into several steps.

We begin by proving the uniform bound (6.7) following the strategy of [KLP21, Theorem 1.7]. The idea is that, in the doubling space \({{\hat{X}}}\), the double of the \(\delta \)-boundary ball \(B_2(p)\) is a \(\delta \)-regular ball; hence Theorem 1.2 provides a sharp control on \({{\hat{\mu }}}_r\), the boundary measure of \({{\hat{X}}}\). The key observation is that \({{\hat{\mu }}}_r = \mu _r\) in \(X{\setminus } B_{r}(\partial X)\) and \(\mu _r(B_r(\partial X))\) is easily controlled by means of the estimate on the tubular neighborhood of \(\partial X\) obtained in [BNS22].

In order to achieve (6.8), we need to sharpen the estimate on \(\mu _r(B_r(\partial X))\) when \(r\downarrow 0\). Here we use two ingredients:

-

(1)

The control of \(\delta \)-boundary balls at every scale and location obtained in [BNS22, Theorem 8.1];

-

(2)

the Laplacian comparison (H2).

The first ingredient says that any ball \(B_r(x)\subset B_2(p)\) is \(\delta \)-GH close to \(B_r((0,{\textsf{d}}_{\partial X}(x))) \subset {\mathbb {R}}_+^N\), hence the volume convergence theorem ensures that their volumes are comparable. Plugging this information in the definition of \(\mu _r(B_r(\partial X))\), it is easily seen that (6.8) follows provided we control the \({\mathscr {H}}^{N-1}\)-measure of the level sets \(\{{\textsf{d}}_{\partial X} = s\}\) in the limit \(s\downarrow 0\). Here is where (2) comes into play. Indeed, the Laplacian bound (6.2) provides an almost monotonicity of \({\mathscr {H}}^{N-1}(\{{\textsf{d}}_{\partial X} = s\})\) guaranteeing sharp controls and the existence of the limit.

6.1.1 Proof of (6.7).

For any \(r<10^{-10}\), we decompose

where \({{\hat{p}}}\in {{\hat{X}}}\) is the point corresponding to p in the doubling \({{\hat{X}}}\), and \(\partial X \subset {{\hat{X}}}\) denotes the image of \(\partial X\) through the isometric embedding \(X \rightarrow {{\hat{X}}}\). Observe that

where \({{\hat{\mu }}}_r\) denotes the metric measure boundary in \({{\hat{X}}}\). Recalling from (3.26) and (3.27) that the negative part of \({{\hat{\mu }}}_r\) is O(r), we deduce

In the last inequality above we used Theorem 2.1 together with the fact that \(B_{10}({{\hat{p}}})\) is a \(\delta \)-regular ball, since \(B_1(p)\) is a \(\delta \)-boundary ball. The tubular neighborhood estimate

proven in [BNS22, Theorem 1.4], implies that

which together with (6.12) gives (6.7).

6.1.2 Proof of (6.8).

For any compact set \(K\subset \partial X\) and \(r\ge 0\), we define

and notice that \(\Gamma _{r,K}=\bigcup _{0\le s\le r}\Sigma _{s,K}\).

We first claim that

provided \(B_1(p)\) is a \(\delta (\varepsilon ,N)\)-boundary ball. In view of (6.12) it is enough to check that

The elementary inclusion

yields

In order to estimate the latter, we use that

as \(r\downarrow 0\) (cf. Ingredient 1 in the proof of Lemma 6.3 below). For \({\mathscr {L}}^1\)-a.e. \(\eta <10^{-10}\), it holds

which implies

The last inequality follows from the continuity of the boundary measure w.r.t. the GH-convergence [BNS22, Theorem 8.8] by assuming \(\delta \le \delta (\varepsilon , N)\).

By virtue of (6.15), in order to conclude the proof of (6.8) it is enough to control \(\mu _r(\Gamma _{10r,{\overline{B}}_1(p)})\). To this aim we rely on the following.

Lemma 6.3

Let \((X,{\textsf{d}}, {\mathscr {H}}^N)\) be an \({{\,\textrm{RCD}\,}}(-\delta (N-1),N)\) space satisfying the conditions (H1) and (H2). Fix \(p\in X\) such that \(B_1(p)\) is a \(\delta \)-boundary ball. Then, setting

the following hold:

-

(a)

there exists a representative of f with \(f(0)={\mathscr {H}}^{N-1}({\overline{B}}_1(p)\cap \partial X)\) and satisfying

$$\begin{aligned} f(s_1)-f(s_2) \le C(N,\delta )(s_1 - s_2) , \qquad \text {for any } 0\le s_2\le s_1<2 ; \end{aligned}$$(6.19) -

(b)

$$\begin{aligned} {\mathscr {H}}^{N-1}(B_1(p)\cap \partial X)\le \lim _{s\downarrow 0} f(s) \le {\mathscr {H}}^{N-1}({\overline{B}}_1(p)\cap \partial X) . \end{aligned}$$(6.20)

Proof

Let us outline the strategy of the proof, avoiding technicalities. Given \(s_2\le s_1\) the almost monotonicity of \(f(s):= {\mathscr {H}}^{N-1}(\Sigma _{s,{\overline{B}}_1(p)})\) between \(s_2\) and \(s_1\) encoded in (6.19) will be obtained by applying the Gauss-Green theorem to the vector field \(\nabla {\textsf{d}}_{\partial X}\) in a rectangular region made of the gradient flow lines of \({\textsf{d}}_{\partial X}\) spanning the region between the horizontal faces \(\Sigma _{s_1,{\overline{B}}_1(p)}\), \(\Sigma _{s_2,{\overline{B}}_1(p)}\) parallel to \(\partial X\). The only boundary terms appearing will be \(f(s_1)\) and \(f(s_2)\), with opposite signs, as the lateral faces of the region have normal vector perpendicular to \(\nabla {\textsf{d}}_{\partial X}\). The sought (6.19) will follow, as the interior term in the Gauss-Green formula is almost nonpositive by the assumption (H2).

Several technical difficulties arise in the course of the proof. The most challenging one is the absence of a priori regularity for the rectangular region considered above, which is dealt with via an approximation argument borrowed from [CaC93].

As the proof is very similar to those of [MS21, Prop. 6.14, Prop. 6.15], we will just list the main ingredients and briefly indicate how to combine them.

Ingredient 1. The measures

weakly converge to \({\mathscr {H}}^{N-1}\llcorner \partial X\) as \(\varepsilon \downarrow 0\). Moreover, for \({\mathscr {L}}^1\)-a.e. \(s>0\), the sequence of measures

weakly converges to \({\mathscr {H}}^{N-1}\llcorner \{{\textsf{d}}_{\partial X}=s\}\) as \(\varepsilon \downarrow 0\).

The first statement can be checked by arguing as in the proof of [MS21, Proposition 6.14] after considering one copy of X as a set of locally finite perimeter in the doubling space \({\hat{X}}\). If \(\sigma \) denotes any weak limit of \(\nu _{\varepsilon }\), the inequality \(\sigma \ge {\mathscr {H}}^{N-1}\llcorner \partial X\) is satisfied without further conditions. The assumption (H2) enters into play in the proof of the opposite inequality. Notice that the local equi-boundedness of the family of measures \(\nu _{\varepsilon }\) follows from the tubular neighbourhood bounds in [BNS22, Theorem 1.4].

The convergence of (6.22) to \({\mathscr {H}}^{N-1}\llcorner \{{\textsf{d}}_{\partial X}=s\}\) is a classical statement, see for instance [ADMG17, BCM21] for the weak convergence to the perimeter of \(\{{\textsf{d}}_{\partial X}\le s\}\) and [ABS19, BPS19] for the identification between perimeter and \((N-1)\)-dimensional Hausdorff measure.

Ingredient 2. The Laplacian of \({\textsf{d}}_{\partial X}\) is a locally finite measure and

The same conclusion holds for \({\textsf{d}}_{\{{\textsf{d}}_{\partial X}\le s\}}\) for a.e. \(s>0\). Moreover, \(\Delta {\textsf{d}}_{\{{\textsf{d}}_{\partial X}\le s\}}=\Delta {\textsf{d}}_{\partial X}\) on the set \(\{{\textsf{d}}_{\partial X}>s\}\).

The first conclusion follows from [BNS22, Theorem 7.4], under the condition (H2). The second conclusion can be proven by employing the coarea formula (3.10) as in the proof of the convergence of (6.22) and the elementary identity

Proof of (a). The bound

can be obtained with the very same argument as in the proof of [MS21, Proposition 6.15]. Indeed, the only ingredients that are required are the Laplacian upper bound for \({\textsf{d}}_{\partial X}\), which is guaranteed by (H2) in the present setting, the coincidence of \({\mathscr {H}}^{N-1}\llcorner \partial X\) with the Minkowski content (Ingredient 1) and the identity stated in Ingredient 2.

Analogously, we can prove that

for \({\mathscr {L}}^1\)-a.e. \(0<s_2<s_1<2\). Indeed, it is sufficient to choose those \(s_1,s_2\) for which the conclusions of Ingredients 1 and 2 hold and such that

Proof of (b). Given (a), it is easy to obtain (b). Indeed, the almost monotonicity (6.19), together with the condition \(f(0)={\mathscr {H}}^{N-1}({\overline{B}}_1(p)\cap \partial X)\), implies that the limit \(\lim _{s\downarrow 0}f(s)\) exists and

It remains to check that

In order to prove it, we fix any \(0<t<1\) and verify that

By Ingredient 1 and the coarea formula (3.10), it is easy to infer that

Thanks to (6.19), (6.26) yields (6.25). Taking the limit as \(t\uparrow 1\) on the right-hand side of (6.25) gives (6.24). \(\square \)

We can now conclude the proof of Theorem 6.2.

The structure theorem for \(\delta \)-boundary balls [BNS22, Theorem 8.1], combined with the volume convergence [C97, CC97, DPG18], easily gives

provided \(\delta \le \delta (\varepsilon ,N)\) is small enough, where \(V_r\) was defined in (6.3).

Hence,

where we used the tubular neighbourhood bound from [BNS22, Theorem 1.4].

Employing the coarea formula (3.10) and noticing that \(1-V_r(t)=0\) for any \(t\ge r\), we can compute

When \(\delta \le \delta (\varepsilon ,N)\), [BNS22, Theorem 1.2] shows that

Thanks to Lemma 6.3 and the dominated convergence theorem, from (6.28) and (6.29) we deduce

Recalling that \(\gamma (N) = \omega _{N-1}\int _0^1(1-V_1(t))\textrm{d}t\), combining (6.15), (6.28) and (6.31), we conclude

hence completing the proof of (6.8).

References

S. Alexander and R.L. Bishop. \({\cal{F}}K\)-convex functions on metric spaces. manuscripta math. (1)110 (2003), 115–133.

L. Ambrosio, E. Bruè, and D. Semola. Rigidity of the 1-Bakry-Émery inequality and sets of finite perimeter in \(\text{ RCD }\) spaces. Geom. Funct. Anal., (4)29 (2019), 949–1001.

L. Ambrosio, S. Di Marino, and N. Gigli. Perimeter as relaxed Minkowski content in metric measure spaces. Nonlinear Anal., 153 (2017), 78–88.

L. Ambrosio, N. Gigli, A. Mondino, and T. Rajala. Riemannian Ricci curvature lower bounds in metric measure spaces with \(\sigma \)-finite measure. Trans. Amer. Math. Soc., 367 (2015), 4661–4701.

L. Ambrosio, N. Gigli, and G. Savaré. Metric measure spaces with Riemannian Ricci curvature bounded from below. Duke Math. J., 163 (2014), 1405–1490.

L. Ambrosio, N. Gigli, and G. Savaré. Bakry-Émery curvature-dimension condition and Riemannian Ricci curvature bounds. Ann. Probab., 43 (2015), 339–404.

L. Ambrosio, A. Mondino, and G. Savaré. On the Bakry-Émery condition, the gradient estimates and the local-to-global property of \(\text{ RCD}^*(K,N)\) metric measure spaces. J. Geom. Anal., 26 (2014), 1–33.

L. Ambrosio, A. Mondino, and G. Savaré. Nonlinear diffusion equations and curvature conditions in metric measure spaces. Mem. Amer. Math. Soc., (1270)262 (2019), v+121 pp.

C. Brena, N. Gigli, S. Honda, and X. Zhu. Weakly non-collapsed \(\text{ RCD }\) spaces are strongly non-collapsed. Journal für die reine und angewandte Mathematik (Crelles Journal) (2022). https://doi.org/10.1515/crelle-2022-0071.

E. Bruè, A. Naber, and D. Semola. Boundary regularity and stability for spaces with Ricci bounded below. Invent. math. (2)228 (2022), 777–891.

E. Bruè, E. Pasqualetto, and D. Semola. Rectifiability of the reduced boundary for sets of finite perimeter over \(\text{ RCD }(K,N)\) spaces. J. Eur. Math. Soc., (2022) https://doi.org/10.4171/JEMS/1217.

E. Bruè, E. Pasqualetto, and D. Semola. Rectifiability of \(\text{ RCD }(K,N)\) spaces via \(\delta \)-splitting maps. Ann. Fenn. Math., 46 (2021), 465–482.

E. Bruè, E. Pasqualetto, and D. Semola. Constancy of the dimension in codimension one and locality of the unit normal on \(\text{ RCD }(K,N)\) spaces. accepted by Ann. Sc. Norm. Super. Pisa Cl. Sci., preprint arXiv:2109.12585v1.

V. Buffa, G. Comi, and M. Miranda. On BV functions and essentially bounded divergence measure fields in metric spaces. Rev. Mat. Iberoam., (3)38 (2022), 883–946.

Y. Burago, M. Gromov, and G. Perelman. A. D. Aleksandrov spaces with curvatures bounded below. Uspekhi Mat. Nauk, (2)47 (1992) (284), 3–51, 222; translation in Russian Math. Surveys (2)47 (1992), 1–58.

L. A. Caffarelli, and A. Córdoba. An elementary regularity theory of minimal surfaces. Differ. Integral Equ., (1)6 (1993), 1–13.

F. Cavalletti, and E. Milman. The globalization theorem for the Curvature-Dimension condition. Invent. math., (1)226 (2021), 1–137.

J. Cheeger. Degeneration of Riemannian metrics under Ricci curvature bounds. Lezioni Fermiane. Scuola Normale Superiore, Pisa, 2001. ii+77 pp.

J. Cheeger, and T.-H. Colding. Lower bounds on Ricci curvature and the almost rigidity of warped products. Ann. of Math. (2), 144 (1996), 189–237.

J. Cheeger, and T.-H. Colding. On the structure of spaces with Ricci curvature bounded below. I. J. Differ. Geom., 46 (1997), 406–480.

J. Cheeger, and T.-H. Colding. On the structure of spaces with Ricci curvature bounded below. III. J. Differ. Geom., 54 (2000), 37–74.

J. Cheeger, W. Jiang, and A. Naber. Rectifiability of singular sets in noncollapsed spaces with Ricci curvature bounded below. Ann. of Math. (2), (2)193 (2021), 407–538.

J. Cheeger, and A. Naber. Regularity of Einstein manifolds and the codimension 4 conjecture. Ann. of Math. (2) (3)182 (2015), 1093–1165.

T.-H. Colding. Ricci curvature and volume convergence. Ann. of Math. (2), (3)145 (1997), 477–501.

G. De Philippis, and N. Gigli. From volume cone to metric cone in the nonsmooth setting. Geom. Funct. Anal., 26 (2016), 1526–1587.

G. De Philippis, and N. Gigli. Non-collapsed spaces with Ricci curvature bounded from below. J. Éc. polytech. Math., 5 (2018), 613–650.

M. Erbar, K. Kuwada, and K.-T. Sturm. On the equivalence of the entropic curvature-dimension condition and Bochner’s inequality on metric measure spaces. Invent. Math., 201 (2015), 993–1071.

N. Gigli. On the differential structure of metric measure spaces and applications. Mem. Amer. Math. Soc., 236 (2015), vi+91 pp.

N. Gigli. Nonsmooth differential geometry: an approach tailored for spaces with Ricci curvature bounded from below. Mem. Amer. Math. Soc., 251 (2018), v+161 pp.

N. Gigli, and E. Pasqualetto. Equivalence of two different notions of tangent bundle on rectifiable metric measure spaces. Comm. Anal. Geom. (1)30 (2022), 1–51.

N. Gigli, T. Rajala, and K.-T. Sturm. Optimal maps and exponentiation on finite-dimensional spaces with Ricci curvature bounded from below. J. Geom. Anal., (4)26 (2016), 2914–2929.

B.-X. Han. Ricci tensor on \(\text{ RCD}^*(K,N)\) spaces. J. Geom. Anal., (2)28 (2018), 1295–1314.

S. Honda. New differential operator and non collapsed \(\text{ RCD }\) spaces. Geom. Topol., (4)24 (2020), 2127–2148.

S. Honda, and Y. Peng. A note on topological stability theorem from RCD spaces to Riemannian manifolds. manuscripta math. (2022). https://doi.org/10.1007/s00229-022-01418-7.

W. Jiang, and A. Naber. \(L^2\) curvature bounds on manifolds with bounded Ricci curvature. Ann. of Math. (2), (1)193 (2021), 107–222.

V. Kapovitch, A. Lytchak, and A. Petrunin. Metric-measure boundary and geodesic flow on Alexandrov spaces. J. Eur. Math. Soc. (JEMS) (1)23 (2021), 29–62.

V. Kapovitch, and A. Mondino. On the topology and the boundary of \(N\)-dimensional \(\text{ RCD }(K,N)\) spaces. Geom. Topol., (1)25 (2021), 445–495.

Y. Kitabeppu, and S. Lakzian. Characterization of low dimensional \(\text{ RCD}^*(K,N)\) spaces. Anal. Geom. Metr. Spaces, (4)4 (2016), 187–215.

N. Li, and A. Naber. Quantitative estimates on the singular sets of Alexandrov spaces. Peking Math. J., (2)3 (2020), 203–234.

J. Lott, and C. Villani. Ricci curvature for metric-measure spaces via optimal transport. Ann. of Math. (2), 169 (2009), 903–991.

M. Miranda Jr. Functions of bounded variation on “good” metric spaces. J. Math. Pures Appl. (9), (8)82 (2003), 975–1004.

A. Mondino, and D. Semola. Weak Laplacian bounds and minimal boundaries in non-smooth spaces with Ricci curvature lower bounds. Preprint arXiv:2107.12344v2, Mem. Amer. Math. Soc. (in press).

G. Perelman. A.D. Alexandrov’s spaces with curvatures bounded from below, II. Preprint available at http://www.math.psu.edu/petrunin/papers/alexandrov/perelmanASWCBFB2+.pdf.

G. Perelman. DC structure on Alexandrov space with curvature bounded from below. Preprint available at https://anton-petrunin.github.io/papers/alexandrov/Cstructure.pdf.

G. Perelman, and A. Petrunin. Quasigeodesics and gradient curves on Alexandrov spaces (1996). Preprint available at https://anton-petrunin.github.io/papers.html.

A. Petrunin. Semiconcave functions in Alexandrov’s geometry. Surveys in differential geometry. Vol. XI, 137–201, Surv. Differ. Geom., 11, Int. Press, Somerville, MA, 2007.

A. Petrunin. Alexandrov meets Lott-Villani-Sturm. Münster J. Math., 4 (2011), 53–64.

T. Rajala. Local Poincaré inequalities from stable curvature conditions on metric spaces. Calc. Var. Partial Differ. Equ., (3–4)44 (2012), 477–494.

G. Savaré. Self-improvement of the Bakry-Émery condition and Wasserstein contraction of the heat flow in \(\text{ RCD }(K,\infty )\) metric measure spaces. Discrete Contin. Dyn. Syst. (4)34 (2014), 1641–1661.

K.-T. Sturm. On the geometry of metric measure spaces I. Acta Math., 196 (2006), 65–131.

K.-T. Sturm. On the geometry of metric measure spaces II. Acta Math., 196 (2006), 133–177.

M.-K. Von Renesse. On local Poincaré via transportation. Math. Z., 259 (2008), 21–31.

H.-C. Zhang, and X.-P. Zhu. Ricci curvature on Alexandrov spaces and rigidity theorems. Comm. Anal. Geom., (3)18 (2010), 503–553.

Acknowledgements

The first named author is supported by the Giorgio and Elena Petronio Fellowship at the Institute for Advanced Study. The second and the last named authors are supported by the European Research Council (ERC), under the European Union Horizon 2020 research and innovation programme, via the ERC Starting Grant “CURVATURE”, grant agreement No. 802689. The first and third author are grateful to Aaron Naber for inspiring conversations around the topics of the present work. The last author is grateful to Alexander Lytchak for inspiring correspondence about the topics of the present work.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bruè, E., Mondino, A. & Semola, D. The metric measure boundary of spaces with Ricci curvature bounded below. Geom. Funct. Anal. 33, 593–636 (2023). https://doi.org/10.1007/s00039-023-00626-x

DOI: https://doi.org/10.1007/s00039-023-00626-x
