Abstract

Increasing the sensitivity of image sensors is a major challenge for current imaging technology. Researchers are tackling it because highly sensitive sensors enable objects to be recognized even in dark environments, which is critical for today’s smartphones, wearable devices, and automobiles. Unfortunately, conventional image-sensor architectures use light-absorptive color filters on every pixel, which fundamentally limits the detected light power per pixel. Recent advances in optical metasurfaces have led to the creation of pixelated light-transmissive color splitters with the potential to enhance sensor sensitivity. These metasurfaces can be used instead of color filters to distinguish primary colors, and unlike color filters, they can direct almost all of the incident light to the photodetectors, thereby maximizing the detectable light power. This review focuses on such metasurface-based color splitters enabling high-sensitivity color-image sensors. Their underlying principles are introduced with a focus on dispersion engineering. Then, their capabilities as optical elements are assessed on the basis of our recent findings. Finally, we discuss how they can be used to create high-sensitivity color-image sensors.

1 Introduction

Image sensors are the eyes of machines and are found in various everyday items, such as smartphones, wearable electronics, and automobiles. Some of these applications require sensors to capture images even in dark environments (Fig. 1a), and over the past several decades, industry and academia have made continuous efforts to enhance their sensitivity. A typical success story is the back-illuminated sensor developed in the late 2000s, which doubled the light power detected per sensor pixel compared with previous sensors [1, 2]. In recent years, however, miniaturization of sensor pixels has been pushed to the sub-micrometer scale to meet the demands for higher resolution and functionality in smartphone cameras [3, 4], and the light power detected per sensor pixel has accordingly decreased in line with the scaling law [2, 5,6,7,8] (Fig. 1b). Therefore, we are faced with a major challenge: how do we increase the light-detection capacity of such tiny pixels?

A promising way to tackle this challenge is the creation of color-splitter-based image sensors that remove the need for light-absorptive color filters. As is common practice with today’s image sensors, red (R), green (G), and blue (B) color filters are integrated on each sensor pixel in order to extract primary color information from a scene (Fig. 2a). Each color filter transmits only light in the wavelength band corresponding to its color and absorbs light in other wavelength bands. As a result, much of the incident light does not reach the photodetector, which is an obvious barrier to improving sensitivity. In contrast, the use of color splitters would allow color information to be captured while directing all of the incident light to the photodetectors, thereby maximizing the detectable light power (Fig. 2b). This concept was proposed previously [9], with a trichroic Bragg grating formed from a multilayered structure used for color splitting, but its application to image sensors proved very challenging because of the large scale gap between such classical color-dispersive optics and current sensor pixels. Although several miniature color splitters have been developed in recent years by taking advantage of rapid advances in nanotechnology, they are difficult to apply directly to current image sensors because of their low efficiency [10, 11], strong polarization dependence [12], or the need to fabricate three-dimensional nanostructures [13, 14]. As such, the creation of color-splitter-based image sensors remains challenging despite their great potential.

To fill this gap, we are working to incorporate the concept of optical metasurfaces into image sensor technology. Optical metasurfaces are artificial surfaces consisting of numerous tiny optical resonators or waveguides (meta-atoms) arranged at intervals shorter than the wavelength of light [15,16,17,18,19,20,21,22]. By appropriately designing a pattern of meta-atoms, one can manipulate the intensity, phase, polarization state, and wavelength dispersion of light incident on the surface with a high degree of freedom. Optical metasurfaces with designed wavelength dispersions are referred to as dispersion-engineered metasurfaces; they are the basis for single-layer achromatic metasurface lenses (metalenses), which have recently attracted significant attention in the field of optics [23,24,25,26,27]. We have extended the concept of dispersion-engineered metasurfaces and have developed a metasurface-based color splitter that splits incident white (W) light into R, G, and B light on high-density pixels [28, 29]. Additionally, we have created a full-color-sorting metalens that has both RGB-color-splitting and light-focusing functions [30, 31]. This allows a pixelated color splitter to have a substantial incident-angle tolerance and sub-micrometer pixel adaptability, which are key features of state-of-the-art image sensors. The metalens consists of a binary pattern of a single material that is highly compatible with the complementary metal–oxide–semiconductor (CMOS) process, which is currently the most common form of image-sensor fabrication technology. We have verified the sensitivity and image quality of a filter-free sensor architecture with our metalenses through simulations and experiments and have shown it dramatically boosts the detected light power without sacrificing image quality [31]. 
These demonstrations highlight the potential of our approach to dramatically improve the sensitivity of image sensors and suggest that it could have an impact on the large image-sensor industry. This review focuses on the principles and performance of these metasurface-based color splitters and discusses their potential in image-sensor technology.

2 Wavefront shaping with dispersion-engineered metasurfaces

To split light into different colors on the spatial scale of a pixel, the wavefront of the light must be controlled for each color. This generally requires materials or structures that provide different optical phase gradients for each color. Such wavefront shaping is practically impossible with natural materials because the wavelength dispersion of the refractive index, which determines the phase, is unique to each material. It is also difficult to fabricate prism-like shapes at the scale of the sensor’s pixels. Similarly, color splitting using diffraction gratings requires an element size and optical path length equivalent to several wavelengths, which is much larger than the scale of sensor pixels.

In contrast, dispersion-engineered metasurfaces can be used to shape the wavefront for each color. Wavefront shaping is generally performed by densely arranging optically independent meta-atoms on a substrate [16]. Typically, the meta-atoms are optical resonators or waveguides with sizes smaller than the wavelength. By placing meta-atoms of various shapes, each with a specifically designed light transmission coefficient (amplitude and phase), at points on the substrate, the incident light is modulated at each point and consequently changed to have the desired wavefront (Fig. 3a). Note that the spatial modulation used in wavefront shaping can be of the amplitude or the phase of the light, but phase-only modulation is most commonly used because of its high transmission efficiency. Dispersion-engineered metasurfaces extend this basic idea across the wavelength dimension and independently control the wavefront for each wavelength [23,24,25,26,27]. To perform spatial phase modulation independently for each wavelength with meta-atoms, each meta-atom must be designed to have the desired phases at all designed wavelengths at the same time. To meet this requirement, meta-atoms with diverse and complex shapes are used because the degree of phase dispersion (phase as a function of wavelength) of optical resonators and waveguides is strongly dependent on their cross-sectional shape.
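As a simple illustration of phase-only wavefront shaping, the sketch below shows how a linear phase ramp across neighboring meta-atoms tilts a normally incident wave, using the generalized law of refraction for normal incidence in air, sin θ = (λ/2π)(dφ/dx). The function name and the per-wavelength phase steps are hypothetical values chosen only for illustration; the 400 nm period and the three wavelengths are the ones quoted in this review. It is not the design procedure used for the reported devices.

```python
import math


def deflection_angle_deg(wavelength_nm, phase_step_rad, period_nm):
    """Deflection angle (degrees) produced by a linear phase ramp.

    Generalized law of refraction for normal incidence in air:
        sin(theta) = (wavelength / (2*pi)) * (d_phi / d_x)
    where d_phi/d_x is approximated by phase_step_rad / period_nm,
    i.e. the phase difference between adjacent meta-atoms.
    """
    sin_theta = wavelength_nm * phase_step_rad / (2.0 * math.pi * period_nm)
    if abs(sin_theta) > 1.0:
        raise ValueError("phase gradient too steep: no propagating deflected wave")
    return math.degrees(math.asin(sin_theta))


# Meta-atom period quoted in the text (nm).
PERIOD_NM = 400.0

# HYPOTHETICAL phase steps between adjacent meta-atoms (radians).
# A dispersion-engineered design gives each wavelength its own slope,
# so each color leaves the surface in a different direction.
phase_steps = {430.0: -0.8, 520.0: 0.0, 635.0: +0.8}

for wavelength, step in phase_steps.items():
    angle = deflection_angle_deg(wavelength, step, PERIOD_NM)
    print(f"{wavelength:.0f} nm: deflection {angle:+.1f} deg")
```

With opposite phase slopes at the short and long wavelengths and a flat phase at the middle wavelength, the three colors are sent left, straight, and right, which is the essence of color splitting by a chromatic metasurface.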

Wavefront shaping with dispersion-engineered metasurfaces. a Concept of optical wavefront shaping with optical metasurfaces. b Schematic diagrams of meta-atoms used for dispersion engineering. The meta-atoms are composed of dielectric nanoposts with several archetypical cross sections. The nanoposts are made of silicon nitride (SiN), and their height and period are fixed at 1250 nm and 400 nm, respectively. c Simulated phase values for various structural parameters of the meta-atoms at wavelengths of 430, 520, and 635 nm. Panels b and c adapted with permission from Ref. 31.

Typical meta-atoms for dispersion-engineered metasurfaces are shown in Fig. 3b. In particular, nanopost waveguide structures with diverse and complex cross-sectional shapes are often used. Figure 3c shows a simulated phase-dispersion map that displays phase values at three wavelengths (430, 520, and 635 nm) for these structures. The nanoposts are made of silicon nitride (SiN), and their height and period are 1250 nm and 400 nm, respectively. The simulation was performed using a rigorous coupled-wave analysis technique. This map clearly shows that a wide range of the phase dispersion space is covered by the variously shaped nanoposts. It is also evident that the covered range of phase dispersion is wider than that obtained with only square nanoposts (the conventional choice for the dielectric meta-atoms of optical metasurfaces). In addition, these meta-atoms work in a polarization-independent manner due to their four-fold rotational symmetry [25, 26]. Therefore, such meta-atoms can be used to make polarization-independent wavefront shaping designs for each wavelength.

The concept of dispersion-engineered metasurfaces first emerged with the creation of “achromatic” metasurfaces [23,24,25,26] that correct color dispersion. In contrast, we have used it here to create “chromatic” metasurfaces that enhance color dispersion. This change in conception has allowed us to realize a new optical element that splits colors at the pixel scale.

3 Metasurface-based pixelated color splitters

A schematic diagram of a color splitter designed with dispersion-engineered metasurfaces [28] is shown in Fig. 4a. The splitter consists of two types of SiN nanopost with different widths that are highly compatible with the CMOS process. The nanoposts provide a phase gradient with a different slope for each RGB wavelength of incident light. This phase gradient causes the light transmitted through the splitter to bend in different directions depending on the color. Figure 4b shows scanning electron microscope (SEM) images of the fabricated color splitter and the intensity profile of light transmitted through it. The light bends in different directions depending on the wavelength, indicating that spatial color splitting is achieved at the pixel scale. There is also no dependence on the polarization of the incident light, so the color splitting works on unpolarized natural light. Figure 4c shows a schematic diagram of an image sensor using this color splitter. Since almost all of the incident light is directed to the pixel's photodetector, the power of the detected light can be enhanced by a factor of about three compared with conventional filter-based sensors. In this sensor architecture, color mixing occurs in some of the pixels. Therefore, signal processing must be used to correct the colors for creating an RGB color image. Examples of the signal processing include a conversion matrix method and an inverse problem method [28]. The first one is based on a pre-designed matrix that directly converts the detected signals into RGB signals with minimum errors. The second one is based on solving an inverse problem for estimating the incident light spectrum from the detected signals. The estimated spectrum is then used to calculate the RGB signals.
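The conversion matrix method can be sketched as an ordinary least-squares fit: given training pairs of detected pixel signals and known RGB values, a 3 × N matrix is chosen to minimize the squared conversion error and is then applied to new signals. The sketch below is a minimal pure-Python illustration under that assumption; the function names, the training colors, and the mixing model in the usage note are hypothetical and are not taken from Ref. 28.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting.

    a is an n x n list of rows; b is an n x k list of rows. Returns x (n x k).
    """
    n = len(a)
    aug = [list(a[i]) + list(b[i]) for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system: training data are not rich enough")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pivot_value = aug[col][col]
        aug[col] = [v / pivot_value for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def fit_conversion_matrix(detected, target_rgb):
    """Least-squares 3 x N matrix mapping detected signals to RGB.

    detected:   list of samples, each a list of N pixel signals
    target_rgb: list of samples, each [R, G, B]
    Solves the normal equations (D^T D) X = D^T T and returns X^T.
    """
    n = len(detected[0])
    dtd = [[sum(s[i] * s[j] for s in detected) for j in range(n)] for i in range(n)]
    dtt = [[sum(s[i] * t[c] for s, t in zip(detected, target_rgb)) for c in range(3)]
           for i in range(n)]
    x = solve_linear(dtd, dtt)  # n x 3
    return [[x[i][c] for i in range(n)] for c in range(3)]  # 3 x n


def apply_conversion(matrix, signal):
    """Multiply a matrix (list of rows) by a signal vector."""
    return [sum(m * s for m, s in zip(row, signal)) for row in matrix]
```

As a usage example, one could simulate detected signals with an assumed 3 × 3 color-mixing matrix, fit the conversion matrix on a handful of known colors, and check that `apply_conversion` recovers the RGB values of a color that was not in the training set. Real sensors would add noise, so the fit would then be a best approximation rather than an exact inverse.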

Pixel-scale color splitter based on optical metasurfaces. a Cross-sectional schematic diagram of the designed color splitter. b Measured light intensity profiles of the light transmitted through the splitter at wavelengths of 430, 520, and 635 nm. c Schematic diagram of a color image sensor using the splitters. Adapted with permission from Ref. 28.

Figure 5 shows a color palette image captured using the sensor, which was virtually constructed with an optical microscope. The color image was generated from a monochrome raw image of the sensor through the inverse problem method. The pixel size corresponds to 1.43 μm, and the total number of pixels is only 132 × 132 because of the limited field of view of the microscope. The color image shows sufficient chromaticity and spatial resolution that are consistent with the reference image captured with a conventional filter-based image sensor. Furthermore, the total light power detected by the sensor is 2.92 times that of the filter-based one. This enhancement in light-detection capacity is expected to increase the sensor's resistance to sensor noise and enable acquisition of higher quality images under noise-dominant imaging conditions, such as in dark environments. In fact, imaging simulations have verified that the image quality (peak signal-to-noise ratio: PSNR) of the sensor is higher than that of a conventional sensor under noise-dominant conditions. As such, the above results show that a high-sensitivity color image sensor could be created by employing color splitters instead of color filters. Furthermore, with more advanced signal processing it would be possible to reconstruct not only the RGB colors, but also the wavelength spectrum [29], so the color-splitter-based sensor could also be operated as a simple spectral image sensor.
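For readers unfamiliar with the image-quality metric mentioned above, the following sketch computes PSNR from its standard definition, PSNR = 10 log10(peak² / MSE), for two images given as flat lists of pixel values. The function name and the default peak value of 1.0 are assumptions made for illustration; the simulation settings used in the cited work are not reproduced here.

```python
import math


def psnr_db(reference, test, peak=1.0):
    """Peak signal-to-noise ratio in decibels.

    reference, test: equal-length sequences of pixel values
    peak:            maximum possible pixel value (1.0 for normalized images)
    """
    if len(reference) != len(test) or len(reference) == 0:
        raise ValueError("images must be non-empty and of equal size")
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


# Example: a uniform error of 0.1 on a normalized image.
print(psnr_db([0.0, 0.0, 0.0], [0.1, 0.1, 0.1]))
```

A higher PSNR means the reconstructed image is closer to the noise-free reference, so a sensor that collects more light is expected to score higher when noise dominates.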

Color image captured with an image sensor using metasurface-based color splitters. a Raw image captured using the proposed sensor. b Color image reconstructed from the raw image. c Color image captured using a conventional filter-based image sensor. Adapted with permission from Ref. 28.

While the above-described sensor offers the benefit of a significant increase in the amount of detected light, it has low tolerance to light incident at an angle. This is because the angle of light transmitted through the color splitter varies depending on the angle of incidence, making it difficult to receive obliquely incident light at the desired pixel. In general, light arriving on an image sensor contains an oblique-incidence component due to the imaging lens (the angle range is determined by the f-number of the lens), so improving the tolerance to oblique incidence is a major challenge in applying color splitters to practical image sensors. Furthermore, color mixing with white light occurs in some of the pixels, so it becomes necessary to perform color corrections by using special signal processing. This special processing not only increases the signal processing costs; it is inconsistent with the signal processing developed for conventional color-image sensors. To solve these problems, the metasurface’s design can be enhanced to create full-color-sorting metalenses that simultaneously act as efficient on-chip color splitters and lenses.

4 Full-color-sorting metalenses

The color splitter described above clearly performs RGB color splitting on the pixel scale, but its function is sensitive to oblique light incidence. Therefore, it is difficult to use sensors with such color splitters in combination with bright imaging optics with low f-numbers. Here, conventional image sensors with color filters use the light-focusing function of microlenses integrated on each filter to improve the oblique incidence characteristics (see Fig. 2a). Thus, adding a similar function to a splitter-based image sensor should be effective in improving its oblique incidence characteristics.

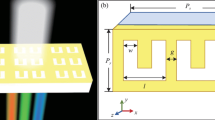

On the basis of this idea, we recently demonstrated a full-color-sorting metalens that integrates both color-splitting and light-focusing functions into a single-layer CMOS-compatible structure [30, 31]. This metalens can replace the color filters and microlenses of conventional sensors (see Fig. 2a). Therefore, we expected that it would dramatically increase the light-detection capacity of the pixels, reduce the number of optical elements required, and provide resistance to oblique incidence. Figure 6 shows a schematic diagram of the functions of the full-color-sorting metalens. The metalens focuses all incident light to the corresponding pixel center while spatially separating it in accordance with the color. Such a complex optical function can be achieved by employing dispersion-enriched meta-atoms (Fig. 3b, c). In particular, this metalens is compatible with a Bayer pixel array [32], a pixel array widely used in conventional image sensors, and thereby it can take advantage of the existing signal processing without modification.

Figure 7a shows an SEM image of the fabricated metalens array (pixel size: 1.6 μm), and Fig. 7b shows the light intensity profile on the lens focal plane when white light from a halogen lamp is vertically incident on it. Clear color splitting and light focusing are observed. Figure 7c shows the spectral responses of the four color pixels, which were obtained from the measured profiles by integrating the intensity over each pixel area for each wavelength. Figure 7d shows the corresponding simulated results. The spectra have peaks in the wavelength range corresponding to the RGB bands, and the peaks for R and G well exceed the upper limit of conventional filter-based image sensors (see the black dashed line). The average transmittance over the entire visible wavelength range was ~65% in the experiment and ~77% in the simulation, showing sufficiently high light transmittance (cf. ~34% for conventional filter-based image sensors [33, 34]). To verify the oblique incidence tolerance of the metalenses, the spectral responses on each pixel were measured by varying the angular distribution of the incident light, defined by the numerical aperture (NA) of the incidence optics. This experiment demonstrated that the color-splitting performance is preserved for NA values up to 0.20 (equivalent to an incident angle of 10.6 degrees and an f-number of 2.5), which is a significant improvement over that of the previous color splitters without a light-focusing function (NA < ~0.07).

Characterization of full-color-sorting metalenses. a Scanning electron microscopy (SEM) image of the fabricated metalens array (pixel size: 1.6 μm). b Light intensity profiles on the metalens’s focal plane under halogen lamp illumination. c Measured spectral responses of color pixels. d Simulated spectral responses of color pixels. Adapted with permission from Ref. 31.

To demonstrate the impact of incorporating metalenses into image sensors, a metalens-integrated sensor was virtually constructed using an optical microscope and its imaging performance was evaluated. Figure 8a shows raw images of color objects captured by the virtual sensor. The pixel size of the sensor corresponded to 1.6 μm, and the total number of pixels was 116 × 116 due to the limited field of view of the microscope. A lens with an NA of 0.10 (equivalent to an f-number of 5) was used for imaging. Color images generated from these raw images by using conventional signal processing (demosaicing [35], color correction [36], and white balance correction [37]) are shown in Fig. 8b. For reference, color images captured under the same conditions by using a virtually constructed conventional filter-based image sensor are shown in Fig. 8c. Taken together, these images show that the sensor had comparable image quality and resolution to those of the conventional sensor. In addition, the sensor had a 2.83 times higher signal level on average compared with the conventional sensor.
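The NA and f-number pairs quoted in this section follow the common paraxial relation N ≈ 1/(2·NA) for a lens in air. The short sketch below applies it; the function name is an assumption for illustration, and the relation is only an approximation for larger apertures.

```python
def f_number_from_na(na):
    """Approximate f-number of a lens in air from its numerical aperture.

    Uses the paraxial relation N = 1 / (2 * NA).
    """
    if not 0.0 < na <= 1.0:
        raise ValueError("numerical aperture in air must be in (0, 1]")
    return 1.0 / (2.0 * na)


# NA values quoted in this section.
for na in (0.10, 0.20):
    print(f"NA = {na:.2f} -> f-number ~ {f_number_from_na(na):.1f}")
```

A smaller f-number means a brighter lens and a wider cone of incident angles at the sensor, which is why oblique-incidence tolerance is expressed in these terms.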

Demonstration of an image sensor using full-color-sorting metalenses. a Raw images captured using the proposed sensor. b Color images generated from the raw images. c Color image captured using a conventional filter-based image sensor. Adapted with permission from Ref. 31.

To verify the suitability of this approach for current image sensors, full-color-sorting metalenses were designed for integration with a sensor chip and their performance was investigated. In this design, the pixel size was set to 0.84 μm, a scale similar to the smallest pixel size (0.7–0.8 μm) in currently available sensors. Figure 9a shows a schematic diagram of the designed unit metalens. Figure 9b shows the spectral responses of the color pixels with this design. All the spectral peaks were well above the upper limit of conventional filter-based image sensors (see the black dashed line). The predicted light-detection capacity was 2.9 times higher than that of filter-based image sensors. A numerical analysis also showed that this sensor architecture is resistant to oblique incidence corresponding to an f-number of about 1.76 (NA = 0.28), which is comparable to the f-numbers of current smartphone cameras (1.6–2.8). Remarkably, an imaging simulation based on this light-detection capacity showed that the sensor had significantly improved image quality compared with that of the conventional sensor under noise-dominant conditions (assuming a dark environment). In other words, the sensor can substantially reduce the effect of sensor noise, which is a major advantage when imaging objects in dark conditions or at very high speeds. These results demonstrate that the sensor can be employed in a variety of imaging systems, including smartphone cameras, and can perform high-sensitivity color imaging without sacrificing image quality or resolution.
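To give a rough feel for why a 2.9-fold gain in detected light matters most in dark scenes, the sketch below uses a simplified textbook pixel noise model with photon shot noise and signal-independent read noise, SNR = S / sqrt(S + σ_read²), with all quantities in electrons. This model, the function names, and the example signal and noise levels are assumptions for illustration only; they are not the imaging simulation reported in Ref. 31.

```python
import math


def snr(signal_e, read_noise_e):
    """Signal-to-noise ratio for shot noise plus read noise (electrons)."""
    if signal_e < 0 or read_noise_e < 0:
        raise ValueError("signal and read noise must be non-negative")
    noise = math.sqrt(signal_e + read_noise_e ** 2)
    return signal_e / noise if noise > 0 else 0.0


def snr_gain(signal_e, read_noise_e, light_gain):
    """SNR ratio when the detected signal is multiplied by light_gain."""
    return snr(light_gain * signal_e, read_noise_e) / snr(signal_e, read_noise_e)


LIGHT_GAIN = 2.9  # predicted light-detection gain quoted in the text

# HYPOTHETICAL operating points: (signal electrons, read noise electrons).
for signal, read_noise in [(10000.0, 2.0), (5.0, 2.0)]:
    gain = snr_gain(signal, read_noise, LIGHT_GAIN)
    print(f"signal {signal:.0f} e-, read noise {read_noise:.0f} e-: SNR x{gain:.2f}")
```

In this model the SNR improvement approaches the square root of the light gain in bright, shot-noise-limited scenes and approaches the full light gain when read noise dominates, which is consistent with the qualitative statement that the benefit is largest under noise-dominant conditions.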

5 Conclusion

This paper outlined pixelated color splitters that leverage recent advances in optical metasurfaces. Implementing such metasurface-based color splitters in an image sensor can overcome the long-standing barrier to increasing sensor sensitivity. Furthermore, the reported splitters have high compatibility with current image sensor technology in terms of both manufacture and systems. This will be a significant advantage in smoothly transitioning this concept to industrial applications. In fact, a prototype of an image sensor with full-color-sorting metalenses directly attached was recently built and tested in a way that clearly demonstrated its superior sensitivity [38]. Image sensors in which both metasurface-based color splitters and color filters are integrated monolithically have also been reported by an image sensor manufacturer [39]. Image processing specific to splitter-based sensors has been proposed as well [40]. These developments will accelerate research and development on metasurface-based image sensors for practical applications and will have a significant impact on the image-sensor industry in the near future.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Iwabuchi, S., Maruyama, Y., Ohgishi, Y., Muramatsu, M., Karasawa, N., Hirayama, T.: A back-illuminated high-sensitivity small-pixel color CMOS image sensor with flexible layout of metal wiring. IEEE International Solid-State Circuits Conference (ISSCC), 1171–1178 (2006)

Wakabayashi, H., Yamaguchi, K., Okano, M., Kuramochi, S., Kumagai, O., Sakane, S., Ito, M., Hatano, M., Kikuchi, M., Yamagata, Y., Shikanai, T., Koseki, K., Mabuchi, K., Maruyama, Y., Akiyama, K., Miyata, E., Honda, T., Ohashi, M., Nomoto, T.: A 1/2.3-inch 10.3 Mpixel 50 frame/s back-illuminated CMOS image sensor. IEEE International Solid-State Circuits Conference (ISSCC), 410–411 (2010)

Okawa, T., Ooki, S., Yamajo, H., Kawada, M., Tachi, M., Goi, K., Yamasaki, T., Iwashita, H., Nakamizo, M., Ogasahara, T., Kitano, Y., Tatani, K.: A 1/2inch 48M all PDAF CMOS image sensor using 0.8 µm Quad Bayer coding 2×2 OCL with 1.0 lux minimum AF illuminance level. IEEE International Electron Devices Meeting (IEDM), 16.3.1–16.3.4 (2019)

Park, D., Lee, S.-W., Han, J., Jang, D., Kwon, H., Cha, S., Kim, M., Lee, H., Suh, S., Joo, W., Lee, Y., Nah, S., Jeong, H., Kim, B., Jung, S., Lee, J., Kim, Y., Moon, C.-R., Park, Y.: A 0.8 µm smart dual conversion gain pixel for 64 Megapixels CMOS image sensor with 12k e- full-well capacitance and low dark noise. IEEE International Electron Devices Meeting (IEDM), 16.2.1–16.2.4 (2019)

Kitamura, K., Watabe, T., Sawamoto, T., Kosugi, T., Akahori, T., Iida, T., Isobe, K., Watanabe, T., Shimamoto, H., Ohtake, H., Aoyama, S., Kawahito, S., Egami, N.: A 33-megapixel 120-frames-per-second 2.5-watt CMOS image sensor with column-parallel two-stage cyclic analog-to-digital converters. IEEE Trans. Electron Devices 59, 3426 (2012)

Toyama, T., Mishina, K., Tsuchiya, H., Ichikawa, T., Iwaki, H., Gendai, Y., Murakami, H., Takamiya, K., Shiroshita, H., Muramatsu, Y., Furusawa, T.: A 17.7 Mpixel 120fps CMOS image sensor with 34.8 Gb/s readout. IEEE International Solid-State Circuits Conference (ISSCC), 420–422 (2011)

Seo, M.W., Suh, S.H., Iida, T., Takasawa, T., Isobe, K., Watanabe, T., Itoh, S., Yasutomo, K., Kawahito, S.: A low-noise high intrascene dynamic range CMOS image sensor with a 13 to 19b variable-resolution column-parallel folding-integration/cyclic ADC. IEEE J. Solid-State Circuits 47, 272–283 (2012)

Yamashita, Y., Takahashi, H., Kikuchi, S., Ota, K., Fujita, M., Hirayama, S., Kanou, T., Hashimoto, S., Momma, G., Inoue, S.: A 300mm wafer-size CMOS image sensor with in-pixel voltage-gain amplifier and column-level differential readout circuitry. IEEE International Solid-State Circuits Conference (ISSCC), 408–410 (2011)

Suzuki, Y., Ozeki, Y., Yoshino, T., Yamada, K., Yamagata, M., Tanaka, Y., Itoh, K.: Proposal of micro-trichroic structures for high-sensitivity color image sensors. Jpn. J. Appl. Phys. 50, 042201 (2011)

Nishiwaki, S., Nakamura, T., Hiramoto, M., Fujii, T., Suzuki, M.: Efficient colour splitters for high-pixel-density image sensors. Nature Photon. 7, 240–246 (2013)

Wang, P., Menon, R.: Ultra-high-sensitivity color imaging via a transparent diffractive-filter array and computational optics. Optica 2, 933–939 (2015)

Chen, B.H., Wu, P.C., Su, V.C., Lai, Y.C., Chu, C.H., Lee, I.C., Chen, J.-W., Chen, Y.H., Lan, Y.-C., Kuan, C.-H., Tsai, D.P.: GaN metalens for pixel-level full-color routing at visible light. Nano Lett. 17, 6345–6352 (2017)

Camayd-Muñoz, P., Ballew, C., Roberts, G., Faraon, A.: Multifunctional volumetric meta-optics for color and polarization image sensors. Optica 7, 280–283 (2020)

Johlin, E.: Nanophotonic color splitters for high-efficiency imaging. Iscience 24, 102268 (2021)

Yu, N., Capasso, F.: Flat optics with designer metasurfaces. Nat. Mater. 13, 139–150 (2014)

Kamali, S.M., Arbabi, E., Arbabi, A., Faraon, A.: A review of dielectric optical metasurfaces for wavefront control. Nanophotonics 7, 1041–1068 (2018)

Lalanne, P., Astilean, S., Chavel, P., Cambril, E., Launois, H.: Blazed binary subwavelength gratings with efficiencies larger than those of conventional échelette gratings. Opt. Lett. 23, 1081–1083 (1998)

Lin, D., Fan, P., Hasman, E., Brongersma, M.L.: Dielectric gradient metasurface optical elements. Science 345, 298–302 (2014)

Arbabi, A., Horie, Y., Ball, A.J., Bagheri, M., Faraon, A.: Subwavelength-thick lenses with high numerical apertures and large efficiency based on high-contrast transmitarrays. Nat. Commun. 6, 7069 (2015)

Arbabi, A., Horie, Y., Bagheri, M., Faraon, A.: Dielectric metasurfaces for complete control of phase and polarization with subwavelength spatial resolution and high transmission. Nat. Nanotechnol. 10, 937–943 (2015)

Miyata, M., Nakajima, M., Hashimoto, T.: Impedance-matched dielectric metasurfaces for non-discrete wavefront engineering. J. Appl. Phys. 125, 103106 (2019)

Miyata, M., Nakajima, M., Hashimoto, T.: Compound-eye metasurface optics enabling a high-sensitivity, ultra-thin polarization camera. Opt. Express 28, 9996–10014 (2020)

Chen, W.T., Zhu, A.Y., Sanjeev, V., Khorasaninejad, M., Shi, Z., Lee, E., Capasso, F.: A broadband achromatic metalens for focusing and imaging in the visible. Nat. Nanotechnol. 13, 220–226 (2018)

Wang, S., Wu, P.C., Su, V.C., Lai, Y.C., Chen, M.K., Kuo, H.Y., Chen, B.H., Chen, Y.H., Huang, T.-T., Wang, J.-H., Lin, R.-M., Kuan, C.-H., Li, T., Wang, Z., Zhu, S., Tsai, D.P.: A broadband achromatic metalens in the visible. Nat. Nanotechnol. 13, 227–232 (2018)

Shrestha, S., Overvig, A.C., Lu, M., Stein, A., Yu, N.: Broadband achromatic dielectric metalenses. Light Sci. Appl. 7, 85 (2018)

Ndao, A., Hsu, L., Ha, J., Park, J.-H., Chang-Hasnain, C., Kanté, B.: Octave bandwidth photonic fishnet-achromatic-metalens. Nat. Commun. 11, 3205 (2020)

Chen, W.T., Park, J.-S., Marchioni, J., Millay, S., Yousef, K.M.A., Capasso, F.: Dispersion-engineered metasurfaces reaching broadband 90% relative diffraction efficiency. Nat. Commun. 14, 2544 (2023)

Miyata, M., Nakajima, M., Hashimoto, T.: High-sensitivity color imaging using pixel-scale color splitters based on dielectric metasurfaces. ACS Photonics 6, 1442–1450 (2019)

Miyata, M., Nakajima, M., Hashimoto, T.: Computational spectral imaging with metasurface-based, pixel-scale color splitters. The 10th International Conference on Metamaterials, Photonic Crystals and Plasmonics (META), 8220 (2019)

Miyata, M., Nemoto, N., Shikama, K., Kobayashi, F., Hashimoto, T.: Color splitting micro-metalenses for high-sensitivity color image sensors. Conference on Lasers and Electro-Optics (CLEO), FTu2M.5 (2021)

Miyata, M., Nemoto, N., Shikama, K., Kobayashi, F., Hashimoto, T.: Full-color-sorting metalenses for high-sensitivity image sensors. Optica 8, 1596–1604 (2021)

Bayer, B. E.: Color imaging array. U.S. patent 3,971,065 (20 July 1976)

Kudo, T., Nanjo, Y., Nozaki, Y., Nagao, K., Yamaguchi, H., Kang, W.-B., Pawlowski, G.: Pigmented photoresists for color filters. J. Photopolym. Sci. Technol. 9, 109–120 (1996)

Taguchi, H., Enokido, M.: Technology of color filter materials for image sensor. Int. Image Sens. Work., 34–37 (2011)

Ramanath, R., Snyder, W.E., Bilbro, G.L., Sander, W.A.: Demosaicking methods for Bayer color arrays. J. Electron. Imaging 11, 306–315 (2002)

Zhang, X., Brainard, D.: Bayesian color correction method for noncolorimetric digital image sensors. Color Imaging Conference. 12, 308 (2004)

Lam, E.Y.: Combining gray world and retinex theory for automatic white balance in digital photography. International Symposium on Consumer Electronics (ISCE), 134–139 (2005)

Miyata, M., Takehara, H., Shikama, K., Nemoto, N., Ohta, J., Hashimoto, T.: Filter-free color image sensors with a full-color-sorting metalens array. Conference on Lasers and Electro-Optics (CLEO), FM1B.8 (2022)

Yun, S., Roh, S., Lee, S., Park, H., Lim, M., Ahn, S., Choo, H.: Highly efficient color separation and focusing in the sub-micron CMOS image sensor. IEEE International Electron Devices Meeting (IEDM), 30.1.1–30.1.4 (2021)

Lee, S., Roh, S., Choo, H., Yun, S.: Characteristics of image sensors with metaphotonic color-routing nanostructures and the rapid resolution-restoring demosaic algorithm. Conference on Lasers and Electro-Optics (CLEO), FW3C-8 (2023)

Acknowledgements

I thank Dr. Toshikazu Hashimoto, Mr. Naru Nemoto, Dr. Mitsumasa Nakajima, Dr. Kota Shikama, Mr. Fumihide Kobayashi (NTT Device Technology Laboratories), and Dr. Yoko Sogabe (NTT Computer and Data Science Laboratories) for their support and advice on research related to this review.

Ethics declarations

Conflict of interest

The author declares no conflicts of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Miyata, M. Dispersion-engineered metasurfaces for high-sensitivity color image sensors. Opt Rev 31, 290–298 (2024). https://doi.org/10.1007/s10043-024-00882-8

DOI: https://doi.org/10.1007/s10043-024-00882-8