Abstract

X-ray diffraction (XRD) is used to characterize the crystal structure of molecular sieves after synthesis experiments. In a high-throughput molecular sieve synthesis system, however, the large volume of data makes timely analysis difficult. Because peak search is the core step of XRD analysis, an automatic peak search method is needed. We therefore propose a novel semantic mask-based two-step framework for peak search in XRD patterns. (1) Mask generation: we propose a multi-resolution net (MRN) that classifies the data points of an XRD pattern into a binary mask (peak/background). (2) Peak search: based on the generated mask, the background points are used to fit an n-order polynomial background curve and to estimate the random noise in the XRD pattern. We then propose three rules, named mask, shape, and intensity, to screen peaks from the initial candidates generated by a maximum search. In addition, a voting strategy is used in peak screening to obtain a precise peak search result. Experiments show that the proposed MRN achieves state-of-the-art performance compared with other semantic segmentation methods, and that the proposed peak search method performs better than Jade when the f1 score is used as the evaluation index.


Introduction

Molecular sieves are important in many industrial chemical processes, such as methanol-to-olefins (MTO) conversion [1,2,3,4], methanol to dimethyl ether conversion [5, 6], propylene/propane separation [7], and nitrogen separation from natural gas [8]. Because molecular sieves with different crystal structures show different catalytic performance, there is a need to find new types of molecular sieves that can provide economic benefits for chemical plants. Benefiting from the combination of robotics, control, and computer science, high-throughput systems make it possible to conduct experiments efficiently and in parallel. Naturally, molecular sieve synthesis can be combined with high-throughput techniques for an efficient search for new molecular sieves [9,10,11,12,13,14].

However, the large amount of experimental data challenges the subsequent analysis, which relies on experts. To improve the efficiency of the analysis step, an automatic approach is needed.

In the analysis step, XRD, an important method for characterizing the crystal structure of molecular sieves, is applied to identify the composition of the products after synthesis. The peaks that appear in an XRD pattern reveal the subtle microstructure, and the analysis seeks to extract this peak information from the pattern. In general, the analysis flow for an XRD pattern consists of three steps: background correction, peak search, and peak fitting. Although work on the first and last steps is well reported [15,16,17,18], there is little work on peak search.

A general peak search routine is provided by the commercial software Jade. Its rule-based peak search method relies on a series of subjective rules, with some parameters that can be changed to adjust the result. However, the parameters sometimes cannot be adjusted to obtain satisfactory results. Marking the peaks manually is more precise, but it takes several minutes to process one XRD pattern, whereas an automatic algorithm needs less than one second.

Motivated by this, we aim to develop a more efficient way to search for peaks. Machine learning methods have few explicit changeable parameters and are therefore user-friendly. However, the dependence of the model on the training samples makes them prone to overfitting. Rule-based methods, in contrast, generally have high generalization ability but involve more complicated parameters, and it is sometimes hard to extract proper rules. Thus, in our method, machine learning is first used to find complicated rules automatically, and a series of simple rules is then used to yield more precise results. In this way, high generalization ability and few operating parameters can be obtained simultaneously.

The XRD peak search task can be simplified to a semantic segmentation task that separates the points of an XRD pattern into two classes: peak and background. Work on the segmentation of X-ray images, which is partly similar to ours, has been reported [19, 20]. However, because XRD patterns are not in image format, there is little work on XRD pattern segmentation. Hence, we reviewed the existing popular semantic segmentation methods. In the early stage of semantic segmentation, the fully convolutional network (FCN) [21] was proposed, derived from the visual geometry group (VGG) net [22]. It replaced the fully connected layer of the VGG net with a 1x1 convolution layer. FCN, which consists of convolution layers only, remains a popular framework for semantic segmentation. Subsequently, considering that a sparse feature map was insufficient for the final segmentation result, DeepLabV1 [23] changed the stride of the convolution kernel from 2 to 1 in the later layers of the VGG net to obtain a dense feature map, and introduced the concept of hole (atrous) convolution, which controls the receptive field. DeepLabV2 [24] then fused hole convolution layers with different hole rates to integrate the influence of different receptive fields and reached better performance than V1. DeepLabV3 [25] modified the hole rates of the different hole convolution layers to avoid the situation in which the hole rates of different layers share a common factor, because in that situation the central information of the hole is not used. SegNet [26], also derived from VGG, proposed a new encoder-decoder framework. Based on SegNet, extra skip connections between the same levels of the encoder and decoder were added to form Unet [27], which is named after the shape of its network architecture. The extra path for information transmission produced a finer segmentation result.
There are also two variants of Unet, called Unet++ [28] and Unet3+ [29], which use more complicated ways of assembling features from different levels.

Based on the semantic segmentation model, a rough mask is generated that describes which areas belong to a peak. The benefit of separating background from peaks is that we can focus on the peak areas, which carry the more important information. However, the binary mask alone is insufficient for peak search because it does not give the accurate locations of the peaks. Thus, extra refinement rules are needed. In conclusion, to solve the problems that the recognition accuracy of existing methods is unsatisfactory and that their complex parameters are hard to configure, the main contributions of the proposed method are:

1. We propose a novel semantic mask-based two-step framework for peak search in XRD patterns. First, the mask generation step uses a semantic segmentation model to recognize the peak and background areas. Then the peak search step gives the precise peak locations by screening the maximum candidates with mask, intensity, and shape screening rules.

2. We propose a multi-resolution net (MRN) for semantic segmentation. It ensures that both large and small peaks can be detected by considering the characteristics of XRD patterns at different resolutions.

Preliminaries

Because semantic segmentation of XRD patterns differs from that of natural images, we first give a brief description of the characteristics of XRD patterns. Then, an extra reconstruction step is applied to normalize different XRD data to a uniform size. Subsequently, the basic segmentation methods and the specific task definition are explained. Finally, the loss function used for training the segmentation models and the evaluation indices are listed.

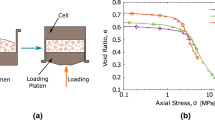

XRD pattern

An XRD pattern (Fig. 1) consists of a sequence of data pairs, each containing a scanning angle \(2\theta \) of the X-ray diffractometer and the corresponding intensity. Compared with RGB images, XRD patterns vary along only one independent dimension and have a single data channel, so they can be regarded as 1-dimensional grayscale images. The steep peaks in an XRD pattern play the role that real-world objects play in images. However, real objects have ambiguous outlines, whereas peaks in an XRD pattern have more regular shapes, which makes an XRD pattern less complex to analyze.

Basic semantic segmentation modelling methods

In general, a semantic segmentation task is defined as follows: given an image, output for each pixel a classification result that refers to a specific object category, so that objects with dissimilar semantic meanings are separated. In this work, the first step of the peak search task can be defined analogously: assign each data point in the XRD pattern to the background or peak class. We chose to build on Unet because of its simple, extensible structure and excellent performance. In addition, Unet extracts features at multiple levels, which can be integrated with a multi-resolution mechanism and makes it compatible with the proposed MRN.

Figure 2 shows a 3-depth Unet, where the depth is a hyperparameter that determines the model structure. The depth is the number of feature levels from top to bottom, and features in the same level have the same size. The whole network can be divided into two parts, an encoder and a decoder, with extra skip connections (grey arrows) between them. During encoding, the input passes from the top to the bottom, and the feature size decreases as the number of channels increases. During decoding, the bottom feature is upsampled step by step to the original input size. At each layer of the decoder, the feature receives extra semantic information from the encoder through the skip connection. Finally, a pixel-wise segmentation result is produced.

Based on Unet, Unet++ modifies the routing of the skip connections and forms a nested architecture, as shown in Fig. 3. Compared with Unet, Unet++ uses a more complicated topology that generates a dense hierarchical net. In addition, extra supervision is added at the first feature level: the sum of the latter two outputs in the first feature level forms the final output. Benefiting from the supervision of different information flows, Unet++ achieved better performance than Unet.

Unet3+ was also inspired by changing the information flow (as shown in Fig. 4). Specifically, it passes features from all levels to every layer of the decoder, instead of passing only the feature of the same level as in Unet. In this way, Unet3+ is fully connected.

Evaluation index and loss function

Before training a semantic segmentation model, the loss function and the evaluation index must be chosen. Our method needs to ensure precision and recall at the same time, so the dice coefficient is a suitable evaluation index for the first, mask-generating step. To keep model training consistent with evaluation, we used dice loss as the loss function instead of cross-entropy loss. Our peak locating work can be defined as a binary classification task (\(N=2\)). The dice coefficient and dice loss are computed from the label \(Y_{i}\) and the predicted value \({\widehat{Y}}_{i}\) of each data point.
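As an illustration, the commonly used soft form of the dice coefficient and the corresponding dice loss can be sketched as follows. This is a minimal NumPy sketch of the standard definition, not necessarily the exact formula used in this work; the function names and the small constant `eps` (added to avoid division by zero) are assumptions.

```python
import numpy as np

def dice_coefficient(y_true, y_pred, eps=1e-7):
    """Soft dice coefficient between a 0/1 label mask and predicted values in [0, 1]."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    intersection = np.sum(y_true * y_pred)
    # eps (an assumption) keeps the ratio defined when both masks are empty
    return (2.0 * intersection + eps) / (np.sum(y_true) + np.sum(y_pred) + eps)

def dice_loss(y_true, y_pred, eps=1e-7):
    """Dice loss: one minus the dice coefficient, so a perfect mask gives 0."""
    return 1.0 - dice_coefficient(y_true, y_pred, eps)
```

Because the coefficient rewards overlap relative to the total size of both masks, minimizing this loss balances missed peak points against falsely marked ones.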

In addition, we used the f1 score to evaluate the performance of the whole two-step peak search method. It is computed from the numbers of true positive (TP), false negative (FN), and false positive (FP) samples as \(F_{1} = 2TP/(2TP+FP+FN)\).
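To make the evaluation concrete, the sketch below counts TP, FP, and FN by matching detected peak positions to manually labelled ones and then computes the f1 score. The matching scheme (each labelled peak matched at most once, within a tolerance `tol`) and all function names are assumptions for illustration; the text does not state how detected peaks are matched to labels.

```python
def match_peaks(true_pos, pred_pos, tol):
    """Count TP, FP, FN by matching each detected peak to the nearest
    unused labelled peak within `tol` (hypothetical matching scheme)."""
    true_sorted = sorted(true_pos)
    used = [False] * len(true_sorted)
    tp = 0
    for p in sorted(pred_pos):
        best = None
        for i, t in enumerate(true_sorted):
            if used[i] or abs(p - t) > tol:
                continue
            if best is None or abs(p - t) < abs(p - true_sorted[best]):
                best = i
        if best is not None:
            used[best] = True
            tp += 1
    fp = len(pred_pos) - tp   # detected peaks with no labelled partner
    fn = len(true_pos) - tp   # labelled peaks that were missed
    return tp, fp, fn

def f1_score(tp, fp, fn):
    """f1 = 2TP / (2TP + FP + FN); returns 0.0 when there is nothing to score."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0
```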

Semantic mask-based two-step peak search

Before processing, we applied a simple interpolation to reconstruct each XRD pattern to a uniform size (as shown in Algorithm 1).
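A minimal sketch of such a reconstruction step is given below, assuming linear interpolation onto an evenly spaced \(2\theta \) grid. The interpolation type, the function name, and the requirement that the input angles are increasing are assumptions; the target size of 2048 follows the value reported in the experiments.

```python
import numpy as np

def resample_pattern(two_theta, intensity, size=2048):
    """Resample an XRD pattern onto `size` evenly spaced 2-theta values.

    Assumes `two_theta` is strictly increasing; uses linear interpolation.
    """
    two_theta = np.asarray(two_theta, dtype=float)
    intensity = np.asarray(intensity, dtype=float)
    new_theta = np.linspace(two_theta[0], two_theta[-1], size)
    new_intensity = np.interp(new_theta, two_theta, intensity)
    return new_theta, new_intensity
```

After this step every pattern has the same length, so patterns measured with different step sizes can be fed to the same network.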

The proposed method is shown in Fig. 5. The processing flow of peak search consists of two steps. First, the peak area is recognized. This task is simple for a human but hard to describe mathematically, so it is handled by a semantic segmentation network that learns the rules implicitly and automatically. Second, a background curve is reconstructed from the data points marked with 0. The background data points are also used to estimate the noise distribution, so that truly valid peaks can be separated from noisy data with a threshold. A maximum search then generates the initial peak candidates, and a set of screening rules (intensity, shape, and mask) is used to remove incorrect peaks.

Mask generation

Because objects can be recognized in images at different resolutions, adding extra views from lower-resolution inputs may benefit semantic segmentation. We therefore propose an integration framework that assembles several subnets with decreasing input resolutions, named the multi-resolution net (MRN).

When viewing an image, people tend to rely on global information such as outlines and ignore texture. Peaks in an XRD pattern can therefore be recognized even at very low resolution, because the outline information is preserved. Machine learning methods, on the other hand, are sensitive to local information, so the details present in high-resolution inputs can disturb the final segmentation result and cause confusion.

To address this problem, reducing the resolution of the input may force the network to capture more global information. We therefore introduce a multi-resolution mechanism that considers several resolutions jointly in semantic segmentation. The MRN consists of several subnets that receive inputs of decreasing resolution, as shown in Fig. 5a. The subnet structure is not fixed. Given an input, the first subnet of the MRN outputs a segmentation result with the same size as the original input. The resolution of the previous input is then halved and the result is fed to the next subnet. Because of the resolution reduction between subnets, an extra upsampling step restores the output of every subnet to the original input size.

The final output is then:

There is a slight difference between our task and image semantic segmentation. Treating the XRD pattern as a 2-D image is unnecessary because only the points on the curve carry valid information; the blank areas are redundant and add computational cost. Thus, the XRD pattern is treated as a 1-D image, and all 2-D convolution operations of traditional image semantic segmentation are converted to 1-D in MRN.
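The data flow of the multi-resolution mechanism on a 1-D pattern can be sketched as follows. This is only an illustration under several assumptions: each subnet is represented by a hypothetical callable that maps a 1-D array to a same-length array of peak scores, the resolution is halved by averaging adjacent points, upsampling uses linear interpolation, and the subnet outputs are combined by a plain average. The actual pooling, upsampling, and fusion used in MRN are not specified in the text above.

```python
import numpy as np

def downsample2(x):
    """Halve the resolution by averaging adjacent pairs (assumed pooling)."""
    n = len(x) // 2 * 2
    return x[:n].reshape(-1, 2).mean(axis=1)

def upsample_to(x, size):
    """Restore a low-resolution output to `size` points by linear interpolation."""
    src = np.linspace(0.0, 1.0, len(x))
    dst = np.linspace(0.0, 1.0, size)
    return np.interp(dst, src, x)

def mrn_forward(x, subnets):
    """Run each subnet on a successively halved input and fuse the outputs.

    `subnets` is a list of callables (placeholders for trained 1-D networks).
    Fusion by averaging is an assumption made for this sketch.
    """
    x = np.asarray(x, dtype=float)
    size = len(x)
    outputs = []
    current = x
    for net in subnets:
        outputs.append(upsample_to(net(current), size))
        current = downsample2(current)  # input of the next subnet
    return np.mean(outputs, axis=0)
```

With three subnets and a 2048-point pattern, the subnets in this sketch see inputs of 2048, 1024, and 512 points, and every output is brought back to 2048 points before fusion.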

With the framework of MRN described above, the optimal number of subnets, which determines a specific MRN structure, remains to be chosen. The number of subnets is limited by the reduction in resolution: in the extreme case, a network cannot acquire any useful information from a one-point pattern. Once the number of subnets is large enough, adding another subnet does not benefit the final segmentation result, because the lower-resolution input of the later subnet offers no valid information. Therefore, an algorithm to determine the optimal number of subnets is proposed (as shown in Algorithm 2). The threshold used to judge the convergence of model performance can be set on demand. A smaller threshold leads to a longer model search and therefore to a higher computational cost.
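One way such a search could work is sketched below: subnets are added one at a time until the gain in the evaluation score falls below the threshold. This is a hedged sketch, not a reproduction of Algorithm 2; the callback `evaluate` (which would train and score an MRN with `n` subnets), the stopping criterion, and the cap `max_subnets` are all assumptions.

```python
def find_subnet_count(evaluate, threshold=0.5, max_subnets=16):
    """Increase the number of subnets until the score gain drops below `threshold`.

    `evaluate(n)` is a hypothetical callback returning the dice coefficient
    (in percent) of an MRN with n subnets.
    """
    best_n, best_score = 1, evaluate(1)
    for n in range(2, max_subnets + 1):
        score = evaluate(n)
        if score - best_score < threshold:
            break  # performance has converged; extra subnets no longer help
        best_n, best_score = n, score
    return best_n, best_score
```

A smaller `threshold` lets the loop run longer before stopping, which matches the trade-off between search length and computational cost described above.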

Peak search

Once the mask is generated, the background data points can be separated from the original data \({{\varvec{X}}}\). A 10th-order polynomial is then fitted to these data points to obtain a background curve \({{\varvec{B}}}\) (as shown in Fig. 6). The background correction removes the influence of the background from the XRD pattern and yields the corrected data (as shown in Eq. 7). Subsequently, the residual error of the background can be computed. Because the noise in XRD data is not exactly normally distributed, we used the "\(p\%\)-rule" rather than the "\(3{\sigma }\)-rule". That is, the absolute residual errors are sorted from smallest to largest, and the cut-off value below which p% of them fall is taken as the threshold \({{\varvec{t}}}\).
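The background fit and the threshold estimate can be sketched with NumPy as follows. The function name is hypothetical, the fit is done against the point index rather than \(2\theta \), and `Polynomial.fit` is used because it rescales the abscissa internally, which keeps a high-order fit numerically stable; these are implementation choices assumed for this sketch. The corrected data is taken to be the original data minus the background curve.

```python
import numpy as np
from numpy.polynomial import Polynomial

def correct_background(x, mask, order=10, p=0.96):
    """Fit a polynomial background to the points with mask == 0 and
    derive the noise threshold t as the p-quantile of |residual|."""
    x = np.asarray(x, dtype=float)
    is_peak = np.asarray(mask).astype(bool)
    idx = np.arange(len(x))
    bg = ~is_peak
    poly = Polynomial.fit(idx[bg], x[bg], deg=order)  # least-squares fit
    background = poly(idx)                            # curve B on all points
    corrected = x - background                        # background-corrected data
    residual = np.abs(corrected[bg])                  # noise seen on background points
    t = np.quantile(residual, p)                      # "p%-rule" threshold
    return corrected, background, t
```

With `p=0.96`, 96% of the background residuals lie at or below `t`, so a candidate whose corrected intensity exceeds `t` is unlikely to be noise.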

Based on the corrected XRD pattern, a Gaussian filter \({{\varvec{F}}}\) (given in Eq. 8) with length \({{\varvec{l}}}\) is used for data smoothing, where \(\pmb {\sigma }\) is a changeable parameter that controls the shape of the Gaussian filter. Then, a maximum search step generates the initial peak candidates \({{\varvec{P}}}\) from the smoothed XRD pattern \({{\varvec{X}}}_{{\textbf {s}}}\).
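A minimal sketch of the smoothing and maximum search is shown below. It assumes a normalized, discretely sampled Gaussian kernel of odd length, edge padding so that the output keeps the input length, and a candidate defined as a point higher than its left neighbour and not lower than its right neighbour; the function names and these details are assumptions rather than the exact definitions of Eq. 8 and the maximum search.

```python
import numpy as np

def gaussian_kernel(length=5, sigma=1.0):
    """Discrete Gaussian filter of the given (odd) length, normalized to sum to 1."""
    offsets = np.arange(length) - (length - 1) / 2.0
    kernel = np.exp(-0.5 * (offsets / sigma) ** 2)
    return kernel / kernel.sum()

def smooth(x, length=5, sigma=1.0):
    """Smooth a pattern with the Gaussian filter; edges are padded by repetition."""
    x = np.asarray(x, dtype=float)
    pad = length // 2
    padded = np.pad(x, pad, mode="edge")
    return np.convolve(padded, gaussian_kernel(length, sigma), mode="valid")

def local_maxima(x):
    """Indices of interior points that rise from the left and do not rise to the right."""
    x = np.asarray(x, dtype=float)
    inner = (x[1:-1] > x[:-2]) & (x[1:-1] >= x[2:])
    return np.flatnonzero(inner) + 1
```

A larger `sigma` flattens the kernel and suppresses more noise-induced maxima, at the cost of blurring narrow neighbouring peaks together.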

We then propose two rules, mask and intensity, for screening the peak candidates (as shown in Eqs. 11 and 12, respectively), where \({{\varvec{M}}}\) refers to the mask generated in the first step and \({{\varvec{S}}}\) and \({{\varvec{S}}}^{'}\) refer to the unscreened and screened sets, respectively.
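Read literally, the two rules can be sketched as simple filters over the candidate indices: the mask rule keeps candidates that lie inside the predicted peak area, and the intensity rule keeps candidates whose corrected intensity exceeds the threshold. The function names and the exact comparisons are assumptions, since the corresponding equations are not reproduced here.

```python
import numpy as np

def mask_rule(candidates, mask):
    """Keep candidates located where the generated mask marks a peak (value 1)."""
    candidates = np.asarray(candidates, dtype=int)
    return candidates[np.asarray(mask)[candidates] == 1]

def intensity_rule(candidates, corrected, t):
    """Keep candidates whose background-corrected intensity exceeds the noise threshold t."""
    candidates = np.asarray(candidates, dtype=int)
    return candidates[np.asarray(corrected)[candidates] > t]
```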

In addition, a shape factor is proposed to ensure that each detected peak rises on its left side and falls on its right side. Based on the shape factor \({{\varvec{S}}}_{{{\varvec{f}}}}\), the shape screening rule is given as follows, where \({{\varvec{sp}}}\) is the screening threshold and \({{\varvec{l}}}_{{{\varvec{w}}}}\) is the length of the detecting window.

The final peak search result is obtained with a voting strategy (as shown in Algorithm 3) that combines the three screening rules.
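A voting scheme over the three screened candidate sets could look like the sketch below. The majority criterion (`min_votes=2`, that is, a candidate is kept when at least two rules accept it) is an assumption for illustration; Algorithm 3 may use a different criterion.

```python
from collections import Counter

def vote(candidate_sets, min_votes=2):
    """Keep the candidates accepted by at least `min_votes` screening rules.

    `candidate_sets` holds one collection of candidate indices per rule
    (for example mask, intensity, and shape). Majority voting is assumed.
    """
    counts = Counter(i for s in candidate_sets for i in set(s))
    return sorted(i for i, c in counts.items() if c >= min_votes)
```

Unlike a sequential pipeline, where a candidate rejected by any single rule is lost, this kind of vote lets a candidate survive one rejection, which is one plausible reason a voting strategy can tolerate stricter individual rules.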

Experiments and discussion

Data sets for semantic segmentation

The XRD data used in this work derive from the cumulative experiments on a 48-channel high-throughput molecular sieve synthesis and characterization system. This system consists of eight hardware units, including solid weighing, sol preparation, crystallization reaction, separation and washing, atmosphere treatment, tablet pressing and screening, XRD characterization, and SEM characterization, and nine units of a dedicated executive software and database system.

The X-ray diffractometer used for characterization is a PANalytical X'Pert PRO. Since Cu K\(\alpha \)2 was removed by a monochromator, Cu K\(\alpha \)1 radiation was applied. The voltage and the current were 40 kV and 40 mA, respectively.

The whole data set used for semantic segmentation contains 2222 samples. Each sample consists of a normalized XRD pattern and a point-wise segmentation label. Data points recognized as part of a peak were labelled 1 and background points were labelled 0. Before model building, each XRD pattern was reconstructed to a size of 2048 data points. Finally, to obtain a model with good generalization performance, we used 5-fold cross-validation for model training.

Peak search result compared to Jade

Thirty-two XRD samples are used to evaluate the performance of the different peak search methods. Half of these samples were used in the training procedure of mask generation. The remaining samples are new samples obtained from Jade. Manually marked peaks are used as the labels. Because the internal algorithm of Jade has many changeable parameters, we select the most important one, the length of the sliding window \({{\varvec{w}}}\), as a variable (set to 7, 11, and 15) for a more comprehensive comparison and apply the default configuration to the remaining parameters. Table 1 shows that our method performs better than Jade.

An intuitive comparison is given in Fig. 7. The result shows that our method preserves as many correct peaks as possible and can also detect tiny peaks while making few mistakes. To investigate the generalization ability, we computed the f1 scores of the two groups of samples separately: those that had been used for training the MRN and those that had not. The results are 85.37 (used samples) and 90.17 (unused samples). This indicates that a satisfactory result can be achieved even on new, unseen data, which makes the method suitable for general use.

In addition to testing on new samples obtained from Jade, we collected 16 XRD patterns of different types of molecular sieves from related references. Because the original XRD data are not provided with the corresponding papers, we reconstructed the XRD patterns from the published images. As a result, the resolution of the reconstructed data is low. Even so, Fig. 8 shows the good performance of our method in qualitative analysis (the rest can be found in Appendix 6). This suggests that our method can be applied in most situations.

Effect of changeable parameters

Although our method has few changeable parameters, their configuration is still important for peak search. The changeable parameters in our method are \(\pmb {\sigma }\), \({{\varvec{p}}}\), and \({{\varvec{sp}}}\). The threshold \({{\varvec{t}}}\) for intensity screening depends on \({{\varvec{p}}}\), so it is not included. The length of the filter and the length of the detecting window for shape factor computation are changeable as well, but varying them has only a slight influence on the search result. Therefore, those experiments are not shown and both values are set to 5.

For a comprehensive comparison, \(\pmb {\sigma }\) is set to [1, 2, 3, 4, 5] and \({{\varvec{sp}}}\) is set to [0.1, 0.3, 0.6, 0.7, 0.9]. By analogy with the "\(3\sigma \)-rule" for a \(N(\mu ,\sigma )\) Gaussian distribution, \({{\varvec{p}}}\) is set to [0.66, 0.96, 0.99], which refers to the corresponding proportion of data contained.
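For reference, the proportion of a normal distribution lying within \(k\) standard deviations of the mean is \(\mathrm{erf}(k/\sqrt{2})\), which gives about 0.683, 0.954, and 0.997 for \(k = 1, 2, 3\). The chosen values of \({{\varvec{p}}}\) appear to correspond roughly to these levels. The short sketch below computes them; the function name is an assumption.

```python
import math

def normal_coverage(k):
    """Fraction of a normal distribution within k standard deviations of the mean."""
    return math.erf(k / math.sqrt(2.0))
```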

Results are shown in Table 2. They indicate that a large \({{\varvec{sp}}}\) ensures the geometric shape of the peak in the neighbouring area. However, an overly large \({{\varvec{sp}}}\), which imposes a strong constraint, also eliminates valid peaks, so 0.7 is a suitable value. Similarly, a large \({{\varvec{p}}}\) is prone to mistakes with tiny peaks, and 0.96 is suitable for eliminating the influence of noise while preserving tiny peaks.

Exploration on the screening strategy

We explored different combinations of the screening rules, which form different screening strategies. When more than one screening rule is used, the rules are applied sequentially. When all three rules are used, the voting strategy (shown in Algorithm 3) offers an alternative to the sequential process (the voting strategy is marked with * to distinguish it from the sequential strategy). Results are shown in Table 3. They indicate that combining two rules improves performance compared with a single rule, and that the voting strategy performs best in most cases. The unsatisfactory result of the sequential strategy may arise because successive screening rules gradually reduce the number of candidates, so that few candidates remain once many rules are applied. A weak constraint is therefore beneficial for the sequential strategy because it maintains the number of candidates (e.g. \(\sigma =1, p=0.66, sp=0.7\)). For the voting strategy, on the other hand, a strong constraint is preferred because it guarantees the quality of the candidates produced by the different screening rules.

Determining the subnets structure

The first, mask-generating step is the core of the proposed peak search method: a high-quality mask not only benefits peak screening but also yields a cleaner background. We therefore compared the proposed MRN with other semantic segmentation methods. Table 4 shows the overall performance comparison, and the results show that MRN achieves the best performance. To further explore the influence of the subnet structure, we ran experiments configuring the MRN with 13 types of subnet structure. The MRN with 1 subnet corresponds to the original method. The results are shown in Table 5. In terms of the dice coefficient, the best performance (84.25) was achieved by the model assembled from seven 3-depth Unets. For each type of subnet structure, the best model performance is shown in bold.

The results indicate that, when Unet-like models are chosen as the subnet, the MRN improves model performance compared with the basic methods. Integrating SegNet, whose structure is similar to that of Unet, also improves model performance. However, compared with Unet, SegNet lacks the skip connections that pass extra semantic information during decoding. Therefore, the improvement of MRN-SegNet in model performance is weak.

The integration of DeepLabV3 behaves in the opposite way: model performance decreases as the number of subnets increases. This phenomenon can be explained by the architecture of DeepLabV3 (as shown in Fig. 9). For a better understanding, DeepLabV3 can be divided into two parts, an encoder and a decoder. Because the decoder is simple and uses only the deepest feature, the subsequent subnets with lower-resolution input lose much information during encoding and perform poorly during decoding. In contrast, although information is also reduced in the encoder of a Unet-like model, the skip connections between the decoder and encoder provide extra information that gives a better segmentation result.

As shown in Table 5, a Unet-like model is an effective choice of subnet for the multi-resolution net. The optimal dice coefficients of the MRN-Unet++ and MRN-Unet3+ models are 84.23 and 84.19, respectively, which are quite close. Choosing Unet as the basic model is therefore suitable, as it offers a tradeoff between model performance and model size (Table 6 shows the number of parameters of the different MRN models).

Exploration of the multi-resolution mechanism

To explore the multi-resolution mechanism, we compared MRN with the basic Unet-like methods. From this perspective, each extra subnet in MRN can be regarded as adding one level of depth to the first subnet. Therefore, an MRN with n m-depth Unets has the same number of feature levels as an (m+n-1)-depth Unet. Figure 10 shows an MRN with three 2-depth Unet subnets, which can be seen as a variant of a 4-depth Unet.

Table 7 shows the experimental results of MRN with Unet-like models (the first number is the number of subnets and the second number is the depth of the subnet). For the same number of feature levels, MRN-Unet and MRN-Unet3+ show a better dice coefficient than the corresponding original methods. Although MRN-Unet++ performs worse than Unet++ when the number of feature levels is 3 or 4, the best result, 83.74, is achieved by the MRN model.

The differences in model performance between MRN and Unet-like models derive from the way resolution is reduced. In Unet, deep features are deduced from the same input, whereas in MRN they are derived from separate inputs. Each subnet is therefore independent of the others, and the deeper feature extracted by a later subnet is unaffected by the previous subnets. In addition, although the pooling operation in Unet can be regarded as resolution reduction, deep features can still recover the lost information from other channels of the shallow layer. As a result, the information reduction in Unet is soft and incomplete.

From this perspective, integrating shallow subnets performs better than directly increasing the depth of a Unet-like model. In addition to its advantage in model performance, MRN also has a smaller model size. The model size of MRN increases linearly with the number of subnets (0.7M, 1.3M, 2.0M), whereas the model size of Unet grows exponentially with depth (0.7M, 2.7M, 10.8M).

Subnets number determination of MRN

In addition to the subnet structure, the number of subnets affects model performance as well. Figure 11 shows the upward trend of model performance with the number of subnets. One notable point is that MRNs built from the same method but with different subnet structures (different depths for Unet-like models) perform differently when the number of subnets is 1, yet reach a similar level of performance as the number of subnets increases. This results from the convergence of model performance, and the convergence point is affected only by the internal composition of the data set.

Once the ratio of resolution reduction reaches the width of the peaks, the truly valid information about the peaks is eliminated and extra subnets no longer benefit the final segmentation result, which leads to the convergence of model performance. Therefore, the proposed automatic framework for searching for the optimal number of subnets is effective. In our work, a convergence detection threshold of 0.5 is suitable.

In conclusion, the model performance of MRN is mainly affected by the number of subnets and has a point of convergence. Because of this convergence point, an MRN with shallow subnets can reach performance similar to that of an MRN with deeper subnets. Therefore, a shallow subnet is sufficient for MRN and also reduces the model size. Table 8 shows the number of parameters of the different models. To sum up, a 2-depth subnet gives satisfactory model performance and a small model size at the same time.

Validation of multi-resolution mechanism

Figure 12 shows the masks generated by different MRN models. It indicates that adding extra subnets gives a more accurate result and eliminates the wrong patterns produced by a single subnet. The segmentation results derived from the different subnets of one MRN are shown in Fig. 13. The subnet that receives the higher-resolution input is sensitive to tiny peaks, which can sometimes be noise. Subnets with lower-resolution input, on the other hand, respond more strongly to large peaks. In this way, the MRN considers the perspectives of different resolutions, and peaks of various sizes can be detected simultaneously.

Conclusion

In this paper, the two-step workflow offers a new way to search for peaks in XRD patterns. By introducing the semantic segmentation framework, the implicit rule for distinguishing the peak area from the background area in an XRD pattern is learned automatically. In addition, the multi-resolution mechanism in MRN benefits model performance and produces a more accurate segmentation result. The generated mask then makes it possible to estimate the noise distribution precisely, so that peaks can be separated from noisy data. Finally, three types of screening rules are proposed, and experiments have shown their validity. The voting strategy for combining the screening rules gives a better result than the sequential process. In conclusion, our method has fewer parameters to adjust and performs better on peak search than existing methods. In future work, we will consider using an instance segmentation model for peak search, which would offer an end-to-end and non-parametric approach. It should also be noted that our framework is not limited to XRD patterns. Given the similarity between XRD patterns and other spectrum-based methods (e.g. near-infrared spectra), the general approach of generating a mask first and then performing fine-grained tasks has the potential to be applied to other spectrum-based methods, and this possibility will be investigated in future work.

Data Availability

The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available due to privacy or ethical restrictions.

References

Dahl IM, Kolboe S (1993) On the reaction mechanism for propene formation in the mto reaction over sapo-34. Catal Lett 20:329–336

Chae HJ, Song YH, Jeong KE, Kim CU, Jeong SY (2010) Physicochemical characteristics of zsm-5/sapo-34 composite catalyst for mto reaction. J Phys Chem Solids 71:600–603

Li J et al (2011) Conversion of methanol over h-zsm-22: the reaction mechanism and deactivation. Catal Today 164:288–292

Wen M, Ren L, Zhang J, Jiang J, Wu P (2021) Designing sapo-18 with energetically favorable tetrahedral si ions for an mto reaction. Chem Commun 57

Pop G, Bozga G, Ganea R, Natu N (2009) Methanol conversion to dimethyl ether over h-sapo-34 catalyst. Ind Eng Chem Res 48:7065–7071

Xiong Z, Zhan E, Li M, Shen W (2020) Dme carbonylation over a hsuz-4 zeolite. Chem Commun 56

Ma X, Lin BK, Wei X, Kniep J, Lin YS (2013) Gamma-alumina supported carbon molecular sieve membrane for propylene/propane separation. Ind Eng Chem Res 52:4297–4305

Nandanwar SU, Corbin DR, Shiflett MB (2020) A review of porous adsorbents for the separation of nitrogen from natural gas. Ind Eng Chem Res 59:13355–13369

Newsam JM, Bein T, Klein J, Maier WF, Stichert W (2002) High throughput experimentation for the synthesis of new crystalline microporous solids. Cheminform 48:355–365

Serra JM, Guillon E, Corma A (2005) Optimizing the conversion of heavy reformate streams into xylenes with zeolite catalysts by using knowledge base high-throughput experimentation techniques. J Catal 232:342–354

Corma A, Diaz-Cabanas MJ, Jorda JL, Martinez C, Moliner M (2006) High-throughput synthesis and catalytic properties of a molecular sieve with 18-and 10-member rings. Nature 443:842–845

Janssen KP, Paul JS, Sels BF, Jacobs PA (2007) High-throughput preparation and testing of ion-exchanged zeolites. Appl Surf Sci 254:699–703

Willhammar T et al (2017) High-throughput synthesis and structure of zeolite zsm-43 with two-directional 8-ring channels. Inorg Chem 56:8856–8864

Chen X et al (2023) High-throughput synthesis of alpo and sapo zeolites by ink jet printing. Chem Commun 59:2157–2160

Tan H-W, Brown SD (2001) Wavelet hybrid direct standardization of near-infrared multivariate calibrations. J Chemom 15:647–663

Mazet V, Carteret C, Brie D, Idier J, Humbert B (2005) Background removal from spectra by designing and minimising a non-quadratic cost function. Chemometr Intell Lab Syst 76:121–133

Zhao J, Lui H, Mclean DI, Zeng H (2007) Automated autofluorescence background subtraction algorithm for biomedical raman spectroscopy. Appl Spectrosc 61:1225–1232

Baek SJ, Park A, Shen A, Hu J (2011) A background elimination method based on linear programming for raman spectra. J Raman Spectrosc 42:1987–1993

Du W, Shen H, Fu J (2021) Automatic defect segmentation in x-ray images based on deep learning. IEEE Trans Industr Electron 68:12912–12920

Gu B, Ge R, Chen Y, Luo L, Coatrieux G (2021) Automatic and robust object detection in x-ray baggage inspection using deep convolutional neural networks. IEEE Trans Industr Electron 68:10248–10257

Long J, Shelhamer E, Darrell T (2015) Fully convolutional networks for semantic segmentation, 3431–3440

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556

Chen LC, Papandreou G, Kokkinos I, Murphy K, Yuille AL (2014) Semantic image segmentation with deep convolutional nets and fully connected crfs. arXiv preprint arXiv:1412.7062

Chen LC, Papandreou G, Kokkinos I, Murphy K, Yuille AL (2018) Deeplab: semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans Pattern Anal Mach Intell 40:834–848

Chen LC, Papandreou G, Schroff F, Adam H (2017) Rethinking atrous convolution for semantic image segmentation. arXiv preprint arXiv:1706.05587

Badrinarayanan V, Kendall A, Cipolla R (2017) Segnet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans Pattern Anal Mach Intell 39:2481–2495

Ronneberger O, Fischer P, Brox T (2015) U-net: Convolutional networks for biomedical image segmentation, 234–241

Zhou Z, Siddiquee MMR, Tajbakhsh N, Liang J (2018) Unet++: A nested u-net architecture for medical image segmentation 3–11

Huang H et al (2020) Unet 3+: A full-scale connected unet for medical image segmentation, 1055–1059

Liang J et al (2021) Synthesis of al-bec zeolite as an efficient catalyst for the alkylation of benzene with 1-dodecene. Microporous Mesoporous Mater 328:111448. https://www.sciencedirect.com/science/article/pii/S1387181121005746

Li J et al (2015) Synthesis of nh3-scr catalyst sapo-56 with different aluminum sources. Procedia Eng 121:967–974. https://www.sciencedirect.com/science/article/pii/S1877705815028921

Dorset DL, Kennedy GJ (2004) Crystal structure of mcm-65: an alternative linkage of ferrierite layers. J Phys Chem B 108:15216–15222. https://doi.org/10.1021/jp040305q

Chiang C-M, Wang I, Tsai T-C (2016) Synthesis and characterization of sapo-37 molecular sieve. Arab J Sci Eng 41:2257–2260. https://doi.org/10.1007/s13369-015-2023-0

Liu X et al (2017) Identification of double four-ring units in germanosilicate itq-13 zeolite by solid-state nmr spectroscopy. Solid State Nuclear Magn Resonance 87:1–9. https://www.sciencedirect.com/science/article/pii/S0926204017300449

Yang X, Camblor MA, Lee Y, Liu H, Olson DH (2004) Synthesis and crystal structure of as-synthesized and calcined pure silica zeolite itq-12. J Am Chem Soc 126:10403–10409. https://doi.org/10.1021/ja0481474

Burel L, Kasian N, Tuel A (2014) Quasi all-silica zeolite obtained by isomorphous degermanation of an as-made germanium-containing precursor. Angew Chem Int Ed 53:1360–1363. https://doi.org/10.1002/anie.201306744

Pinilla-Herrero I, Gómez-Hortigüela L, Márquez-Álvarez C, Sastre E (2016) Unexpected crystal growth modifier effect of glucosamine as additive in the synthesis of sapo-35. Microporous Mesoporous Mater 219:322–326. https://www.sciencedirect.com/science/article/pii/S1387181115004424

Piccione PM, Davis ME (2001) A new structure-directing agent for the synthesis of pure-phase zsm-11. Microporous Mesoporous Mater 49:163–169. https://www.sciencedirect.com/science/article/pii/S1387181101004140

Lee SH et al (2000) Synthesis of zeolite zsm-57 and its catalytic evaluation for the 1-butene skeletal isomerization and n-octane cracking 196:158–166

Foster M, Treacy M, Higgins JB, Rivin I, Randall KH (2010) A systematic topological search for the framework of zsm-10. J Appl Crystallogr 38:1028–1030

Willhammar T et al (2017) High-throughput synthesis and structure of zeolite zsm-43 with two-directional 8-ring channels. Inorg Chem 56:8856–8864. https://doi.org/10.1021/acs.inorgchem.7b00752

Muraza O et al (2014) Selective catalytic cracking of n-hexane to propylene over hierarchical mtt zeolite. Fuel 135:105–111. https://www.sciencedirect.com/science/article/pii/S0016236114006115

Dorset DL, Kennedy GJ (2005) Crystal structure of mcm-70: a microporous material with high framework density. J Phys Chem B 109:13891–13898. https://doi.org/10.1021/jp0580219

Turrina A et al (2017) Sta-20: an abc-6 zeotype structure prepared by co-templating and solved via a hypothetical structure database and stem-adf imaging. Chem Mater 29:2180–2190. https://doi.org/10.1021/acs.chemmater.6b04892

Jamil AK, Muraza O (2016) Facile control of nanosized zsm-22 crystals using dynamic crystallization technique. Microporous Mesoporous Mater 227:16–22. https://www.sciencedirect.com/science/article/pii/S1387181116000925

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Basic Science Center Program: 61988101), the National Natural Science Foundation of China (62173145), the Shanghai Committee of Science and Technology, China (Grant No. 22DZ1101500), the Major Program of Qingyuan Innovation Laboratory (Grant No. 00122002), the Fundamental Research Funds for the Central Universities, and Shanghai AI Lab.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A Peak search results of reference samples

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wei, Z., Peng, X., Du, W. et al. Semantic mask-based two-step approach: a general framework for X-ray diffraction peak search in high-throughput molecular sieve synthetic system. Complex Intell. Syst. (2024). https://doi.org/10.1007/s40747-024-01396-1

DOI: https://doi.org/10.1007/s40747-024-01396-1